The three top conferences in computer vision are ICCV, CVPR, and ECCV, collectively known as ICE.

A roundup of 26 CVPR 2022 papers on document image analysis and recognition

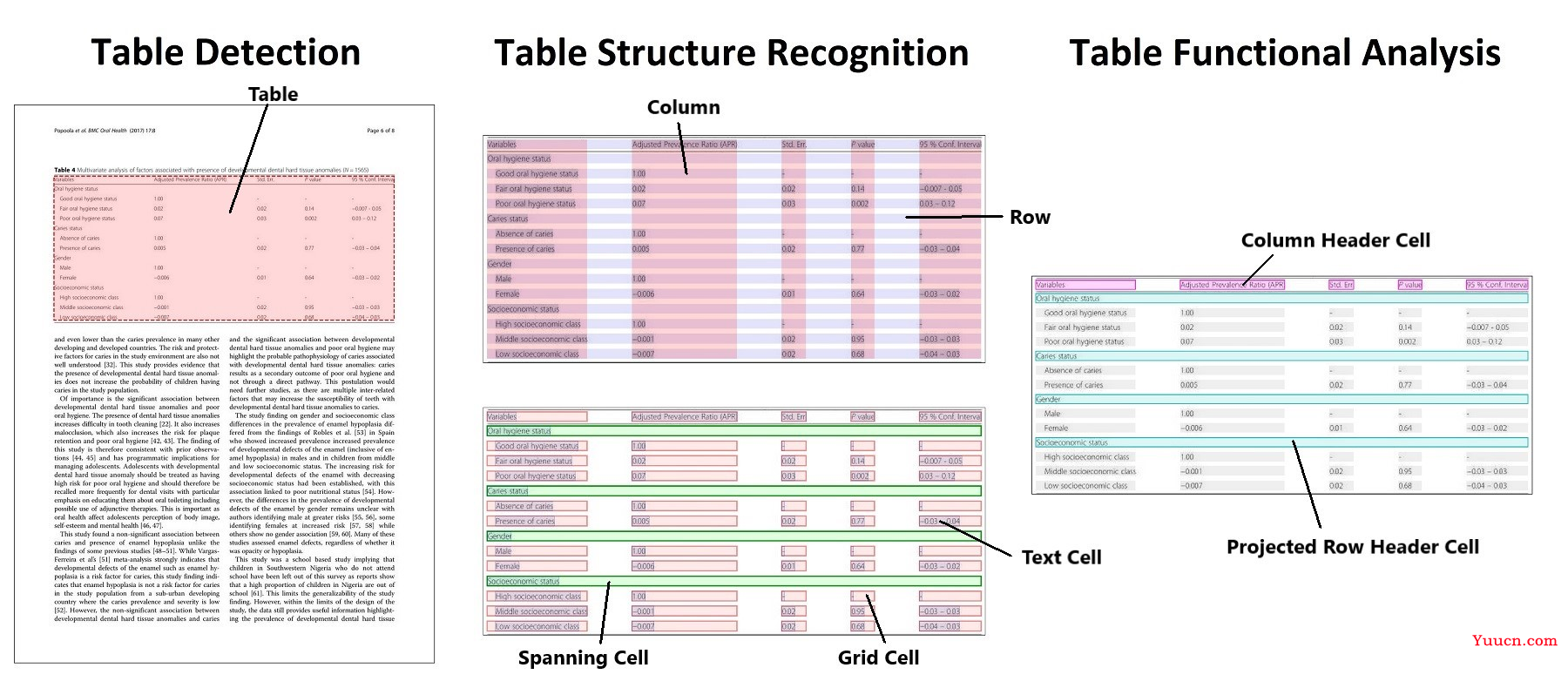

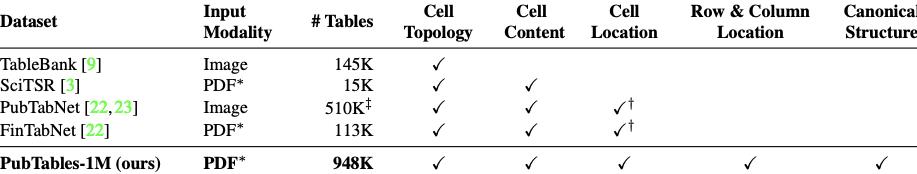

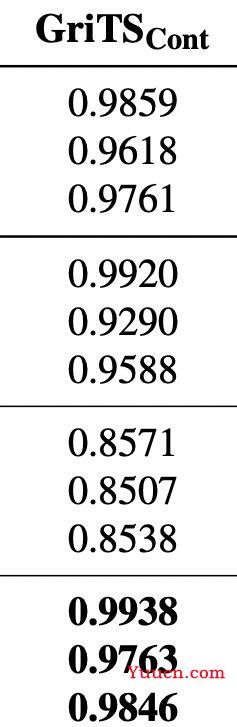

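Paper: "PubTables-1M: Towards comprehensive table extraction from unstructured documents", published at CVPR.

The authors released two models: one for table detection and one for table structure recognition.

For a walkthrough of the paper, see [Paper Reading] PubTables-1M: Towards comprehensive table extraction from unstructured documents

Hugging Face Table Transformer documentation

Hugging Face Table DETR documentation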

Table detection

    from huggingface_hub import hf_hub_download
    from transformers import AutoImageProcessor, TableTransformerForObjectDetection
    import torch
    from PIL import Image

    # download a sample page image from the Hugging Face Hub
    file_path = hf_hub_download(repo_id="nielsr/example-pdf", repo_type="dataset", filename="example_pdf.png")
    image = Image.open(file_path).convert("RGB")

    image_processor = AutoImageProcessor.from_pretrained("microsoft/table-transformer-detection")
    model = TableTransformerForObjectDetection.from_pretrained("microsoft/table-transformer-detection")

    inputs = image_processor(images=image, return_tensors="pt")
    outputs = model(**inputs)

    # convert outputs (bounding boxes and class logits) to COCO API
    target_sizes = torch.tensor([image.size[::-1]])
    results = image_processor.post_process_object_detection(outputs, threshold=0.9, target_sizes=target_sizes)[0]

    for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
        box = [round(i, 2) for i in box.tolist()]
        print(
            f"Detected {model.config.id2label[label.item()]} with confidence "
            f"{round(score.item(), 3)} at location {box}"
        )

    # crop the last detected table (assumes at least one detection above the threshold)
    region = image.crop(box)
    region.save('xxx.jpg')  # save the cropped table
    # Detected table with confidence 1.0 at location [202.1, 210.59, 1119.22, 385.09]


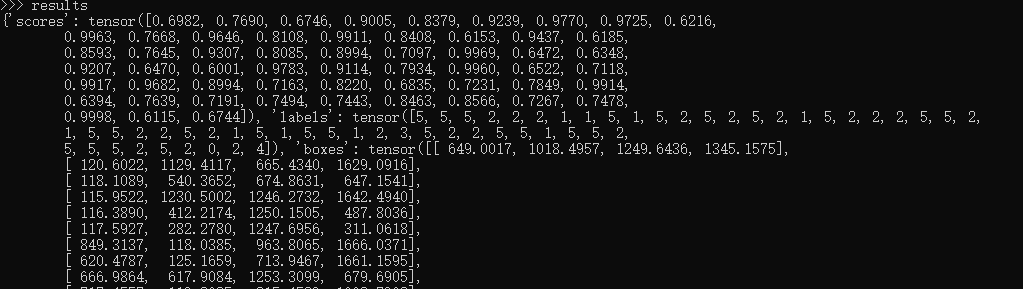

Result: the table is detected accurately.

Table structure recognition

Reference: https://github.com/NielsRogge/Transformers-Tutorials/blob/master/Table%20Transformer/Using_Table_Transformer_for_table_detection_and_table_structure_recognition.ipynb

    import torch
    from PIL import Image
    from huggingface_hub import hf_hub_download
    # DetrFeatureExtractor is deprecated in newer transformers releases in favor of DetrImageProcessor
    from transformers import DetrFeatureExtractor, TableTransformerForObjectDetection

    feature_extractor = DetrFeatureExtractor()

    file_path = hf_hub_download(repo_id="nielsr/example-pdf", repo_type="dataset", filename="example_pdf.png")
    image = Image.open(file_path).convert("RGB")

    encoding = feature_extractor(image, return_tensors="pt")
    model = TableTransformerForObjectDetection.from_pretrained("microsoft/table-transformer-structure-recognition")

    with torch.no_grad():
        outputs = model(**encoding)

    # target_sizes is (height, width); PIL's image.size is (width, height)
    target_sizes = [image.size[::-1]]
    results = feature_extractor.post_process_object_detection(outputs, threshold=0.6, target_sizes=target_sizes)[0]

    # plot_results(image, results['scores'], results['labels'], results['boxes'])
    results

Extracting the column images:

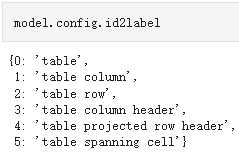

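    # label 1 is "table column" in the structure recognition model's id2label mapping
    columns_box_list = [results['boxes'][i].tolist() for i in range(len(results['boxes'])) if results['labels'][i].item() == 1]
    columns_1 = image.crop(columns_box_list[0])  # crop the first column box
    columns_1.save('columns_1.jpg')  # save the column image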
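The column boxes come back in no particular order and as floats, so `columns_box_list[0]` is not necessarily the leftmost column. The following is a minimal sketch of ordering the boxes left to right and turning them into integer crop boxes clamped to the image bounds. The helper names `sort_columns_left_to_right` and `to_crop_box` are hypothetical and not part of transformers or PIL, and the sample boxes are made-up values for illustration.

```python
import math


def sort_columns_left_to_right(boxes):
    """Order (xmin, ymin, xmax, ymax) boxes by their left edge."""
    return sorted(boxes, key=lambda box: box[0])


def to_crop_box(box, image_size):
    """Round a float box outward to ints and clamp it to the image.

    image_size is (width, height), the same order as PIL's Image.size.
    """
    width, height = image_size
    xmin, ymin, xmax, ymax = box
    return (
        max(0, math.floor(xmin)),
        max(0, math.floor(ymin)),
        min(width, math.ceil(xmax)),
        min(height, math.ceil(ymax)),
    )


# made-up column boxes, deliberately out of order
boxes = [[120.4, 0.2, 200.9, 90.0], [5.6, -1.5, 110.2, 95.7]]
ordered = sort_columns_left_to_right(boxes)
crop_boxes = [to_crop_box(box, image_size=(200, 90)) for box in ordered]
print(crop_boxes)
```

Each resulting tuple can be passed to `image.crop(...)` in the same way as `columns_box_list[0]` above.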

Visualization:

    import matplotlib.pyplot as plt

    # colors for visualization
    COLORS = [[0.000, 0.447, 0.741], [0.850, 0.325, 0.098], [0.929, 0.694, 0.125],
              [0.494, 0.184, 0.556], [0.466, 0.674, 0.188], [0.301, 0.745, 0.933]]

    def plot_results(pil_img, scores, labels, boxes):
        plt.figure(figsize=(16, 10))
        plt.imshow(pil_img)
        ax = plt.gca()
        colors = COLORS * 100
        for score, label, (xmin, ymin, xmax, ymax), c in zip(scores.tolist(), labels.tolist(), boxes.tolist(), colors):
            ax.add_patch(plt.Rectangle((xmin, ymin), xmax - xmin, ymax - ymin,
                                       fill=False, color=c, linewidth=3))
            # relies on the global `model` for the label names
            text = f'{model.config.id2label[label]}: {score:0.2f}'
            ax.text(xmin, ymin, text, fontsize=15,
                    bbox=dict(facecolor='yellow', alpha=0.5))
        plt.axis('off')
        plt.show()

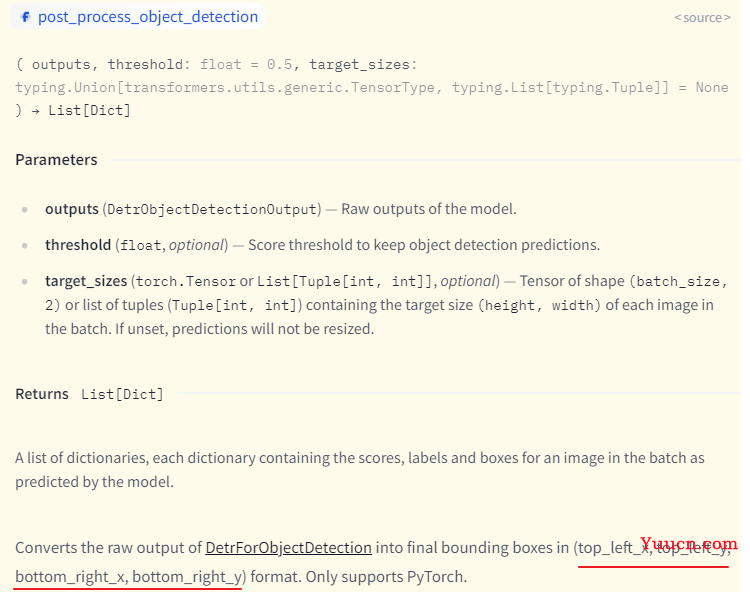

The post_process_object_detection method:
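As far as I understand it, for DETR-style models this method takes the raw per-query class logits and normalized (center_x, center_y, width, height) boxes, applies a softmax, ignores the trailing "no object" class, converts the boxes to absolute (xmin, ymin, xmax, ymax) pixels using `target_sizes` (height, width), and keeps only the detections whose score exceeds `threshold`. The plain-Python sketch below illustrates that logic on hand-written numbers. It is not the library's implementation, and `post_process_sketch` and its helpers are hypothetical names.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def center_to_corners(box):
    """(cx, cy, w, h) -> (xmin, ymin, xmax, ymax), still normalized to 0..1."""
    cx, cy, w, h = box
    return [cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2]


def post_process_sketch(logits, pred_boxes, target_size, threshold=0.5):
    """logits: one list of class scores per query, last entry = "no object".
    pred_boxes: one normalized (cx, cy, w, h) box per query.
    target_size: (height, width) of the original image.
    """
    height, width = target_size
    scores, labels, boxes = [], [], []
    for query_logits, box in zip(logits, pred_boxes):
        probs = softmax(query_logits)[:-1]  # drop the "no object" class
        score = max(probs)
        if score <= threshold:
            continue  # below the confidence threshold
        xmin, ymin, xmax, ymax = center_to_corners(box)
        scores.append(score)
        labels.append(probs.index(score))
        boxes.append([xmin * width, ymin * height, xmax * width, ymax * height])
    return {"scores": scores, "labels": labels, "boxes": boxes}


# two queries: the first is a confident class-0 hit, the second is "no object"
demo = post_process_sketch(
    logits=[[5.0, 0.0, 0.0], [0.0, 0.0, 5.0]],
    pred_boxes=[[0.5, 0.5, 0.2, 0.4], [0.1, 0.1, 0.1, 0.1]],
    target_size=(100, 200),
    threshold=0.9,
)
print(demo)
```

This also explains why the examples here pass `image.size[::-1]`: PIL reports (width, height), while `target_sizes` expects (height, width).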

Converting between OpenCV and PIL image formats

Reference: https://blog.csdn.net/dcrmg/article/details/78147219

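PIL → OpenCV

    cv2.cvtColor(numpy.asarray(image), cv2.COLOR_RGB2BGR)

PIL stores channels as RGB while OpenCV expects BGR, so the array has to be converted. Full example:

    import cv2
    from PIL import Image
    import numpy

    image = Image.open("plane.jpg")
    image.show()
    # PIL image -> NumPy array (RGB) -> BGR array for OpenCV
    img = cv2.cvtColor(numpy.asarray(image), cv2.COLOR_RGB2BGR)
    cv2.imshow("OpenCV", img)
    cv2.waitKey()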

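OpenCV → PIL

    Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

The reverse direction converts BGR back to RGB before wrapping the array in a PIL image. Full example:

    import cv2
    from PIL import Image

    img = cv2.imread("plane.jpg")  # OpenCV loads images as BGR arrays
    cv2.imshow("OpenCV", img)
    # BGR array -> RGB array -> PIL image
    image = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
    image.show()
    cv2.waitKey()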

Putting it all together, the code for detecting table columns is as follows:

    # Table detection followed by column recognition
    import cv2
    import matplotlib.pyplot as plt
    import torch
    from PIL import Image
    # DetrFeatureExtractor is deprecated in newer transformers releases in favor of DetrImageProcessor
    from transformers import AutoImageProcessor, DetrFeatureExtractor, TableTransformerForObjectDetection


    def detect_table(file_path):
        """Detect tables in the image at file_path and return the cropped table region."""
        # file_path = hf_hub_download(repo_id="nielsr/example-pdf", repo_type="dataset", filename="example_pdf.png")
        image = Image.open(file_path).convert("RGB")
        # AutoImageProcessor is a generic class that loads the image processor matching the checkpoint
        image_processor = AutoImageProcessor.from_pretrained("microsoft/table-transformer-detection")
        model = TableTransformerForObjectDetection.from_pretrained("microsoft/table-transformer-detection")
        inputs = image_processor(images=image, return_tensors="pt")
        with torch.no_grad():
            outputs = model(**inputs)
        # convert outputs (bounding boxes and class logits) to COCO API
        target_sizes = torch.tensor([image.size[::-1]])
        results = image_processor.post_process_object_detection(outputs, threshold=0.9, target_sizes=target_sizes)[0]
        box_list = []
        for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
            box = [round(i, 2) for i in box.tolist()]
            print(
                f"Detected {model.config.id2label[label.item()]} with confidence "
                f"{round(score.item(), 3)} at location {box}"
            )
            box_list.append(box)
        if not box_list:
            raise ValueError(f"no table detected in {file_path}")
        # as in the original flow, crop the last detected table
        region = image.crop(box_list[-1])
        # region.save('xxx.jpg')  # optionally save the crop
        return region


    def plot_results(pil_img, scores, labels, boxes):
        """Draw the column boxes (label 1) with their scores on top of the image."""
        # colors for visualization
        COLORS = [[0.000, 0.447, 0.741], [0.850, 0.325, 0.098], [0.929, 0.694, 0.125],
                  [0.494, 0.184, 0.556], [0.466, 0.674, 0.188], [0.301, 0.745, 0.933]]
        plt.figure(figsize=(16, 10))
        plt.imshow(pil_img)
        ax = plt.gca()
        colors = COLORS * 100
        for score, label, (xmin, ymin, xmax, ymax), c in zip(scores.tolist(), labels.tolist(), boxes.tolist(), colors):
            if label == 1:  # only draw table columns
                ax.add_patch(plt.Rectangle((xmin, ymin), xmax - xmin, ymax - ymin,
                                           fill=False, color=c, linewidth=3))
                # text = f'{model.config.id2label[label]}: {score:0.2f}'
                text = f'{score:0.2f}'
                ax.text(xmin, ymin, text, fontsize=15,
                        bbox=dict(facecolor='yellow', alpha=0.5))
        plt.axis('off')
        plt.show()


    def cv_show(img):
        """Show an OpenCV (BGR) image in a window until a key is pressed."""
        cv2.namedWindow('name', cv2.WINDOW_KEEPRATIO)  # cv2.WINDOW_NORMAL | cv2.WINDOW_KEEPRATIO
        cv2.imshow('name', img)
        cv2.waitKey(0)
        cv2.destroyAllWindows()


    def dect_col(file_path):
        """Detect the table, recognize the columns in its left half, and return their boxes."""
        table = detect_table(file_path)
        # keep only the left half of the table
        left_table = table.crop((0, 0, table.size[0] // 2, table.size[1]))
        feature_extractor = DetrFeatureExtractor()
        encoding = feature_extractor(left_table, return_tensors="pt")
        model = TableTransformerForObjectDetection.from_pretrained("microsoft/table-transformer-structure-recognition")
        with torch.no_grad():
            outputs = model(**encoding)
        target_sizes = [left_table.size[::-1]]
        results = feature_extractor.post_process_object_detection(outputs, threshold=0.6, target_sizes=target_sizes)[0]
        plot_results(left_table, results['scores'], results['labels'], results['boxes'])
        # label 1 is "table column"; boxes are (left, upper, right, lower) relative to left_table
        columns_box_list = [box.tolist() for box, label in zip(results['boxes'], results['labels']) if label.item() == 1]
        columns_box_list.sort()  # left to right, since xmin is compared first
        # columns_1 = left_table.crop(columns_box_list[0])
        # columns_1.save('columns_1.jpg')  # optionally save the first column
        return columns_box_list


    dect_col(r'xxxx.jpg')
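The column boxes produced this way are relative to the cropped table, not to the original page. Since the left-half crop starts at the table's top-left corner, mapping a box back to page coordinates only needs the table's own (xmin, ymin) offset. The sketch below assumes the table box from the detection step is kept around. `to_page_coords` and `crop_origin` are hypothetical names, and the column box is a made-up value.

```python
def to_page_coords(box, crop_origin):
    """Shift an (xmin, ymin, xmax, ymax) box from crop space into page space.

    crop_origin is the (xmin, ymin) of the crop within the original page.
    """
    origin_x, origin_y = crop_origin
    xmin, ymin, xmax, ymax = box
    return [xmin + origin_x, ymin + origin_y, xmax + origin_x, ymax + origin_y]


# table box as printed by the detection example; column box is illustrative only
table_box = [202.1, 210.59, 1119.22, 385.09]
column_box_in_crop = [10.0, 20.0, 50.0, 80.0]
column_box_on_page = to_page_coords(column_box_in_crop, crop_origin=table_box[:2])
print(column_box_on_page)
```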