Code Analysis

References

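- Before reading, you should have a basic understanding of the Transformer model; consider reading the Transformer paper first (paper download link).
- Read the Informer time-series paper, focusing on what the authors changed in the Transformer model (paper download link).
- Informer GitHub repository. The data is not included in the project and must be downloaded separately; a download link (code files plus data) is provided here.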

Parameter Settings Module (main_informer)

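- Note: remove `required=True` from the '--model' and '--data' arguments; otherwise running the code may fail with an error saying '--model' and '--data' are required.
- After changing the parameters, run this module; once it runs without errors, start debugging.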

Parameter definitions

```python
import argparse

import torch

parser = argparse.ArgumentParser()

# Model and data ('required=True' has been removed from --model and --data)
parser.add_argument('--model', type=str, default='informer', help='model of experiment, options: [informer, informerstack, informerlight(TBD)]')
parser.add_argument('--data', type=str, default='WTH', help='data')
parser.add_argument('--root_path', type=str, default='./data/', help='root path of the data file')
parser.add_argument('--data_path', type=str, default='WTH.csv', help='data file')
parser.add_argument('--features', type=str, default='M', help='forecasting task, options:[M, S, MS]; M:multivariate predict multivariate, S:univariate predict univariate, MS:multivariate predict univariate')
parser.add_argument('--target', type=str, default='OT', help='target feature in S or MS task')
parser.add_argument('--freq', type=str, default='h', help='freq for time features encoding, options:[s:secondly, t:minutely, h:hourly, d:daily, b:business days, w:weekly, m:monthly], you can also use more detailed freq like 15min or 3h')
parser.add_argument('--checkpoints', type=str, default='./checkpoints/', help='location of model checkpoints')

# Window lengths: encoder input, decoder start token, forecast horizon
parser.add_argument('--seq_len', type=int, default=96, help='input sequence length of Informer encoder')
parser.add_argument('--label_len', type=int, default=48, help='start token length of Informer decoder')
parser.add_argument('--pred_len', type=int, default=24, help='prediction sequence length')

# Model size and structure
parser.add_argument('--enc_in', type=int, default=7, help='encoder input size')
parser.add_argument('--dec_in', type=int, default=7, help='decoder input size')
parser.add_argument('--c_out', type=int, default=7, help='output size')
parser.add_argument('--d_model', type=int, default=512, help='dimension of model')
parser.add_argument('--n_heads', type=int, default=8, help='num of heads')
parser.add_argument('--e_layers', type=int, default=2, help='num of encoder layers')
parser.add_argument('--d_layers', type=int, default=1, help='num of decoder layers')
parser.add_argument('--s_layers', type=str, default='3,2,1', help='num of stack encoder layers')
parser.add_argument('--d_ff', type=int, default=2048, help='dimension of fcn')
parser.add_argument('--factor', type=int, default=5, help='probsparse attn factor')
parser.add_argument('--padding', type=int, default=0, help='padding type')
# store_false: distil and mix default to True; passing the flag turns them OFF
parser.add_argument('--distil', action='store_false', help='whether to use distilling in encoder, using this argument means not using distilling', default=True)
parser.add_argument('--dropout', type=float, default=0.05, help='dropout')
parser.add_argument('--attn', type=str, default='prob', help='attention used in encoder, options:[prob, full]')
parser.add_argument('--embed', type=str, default='timeF', help='time features encoding, options:[timeF, fixed, learned]')
parser.add_argument('--activation', type=str, default='gelu', help='activation')
parser.add_argument('--output_attention', action='store_true', help='whether to output attention in encoder')
parser.add_argument('--do_predict', action='store_true', help='whether to predict unseen future data')
parser.add_argument('--mix', action='store_false', help='use mix attention in generative decoder', default=True)
parser.add_argument('--cols', type=str, nargs='+', help='certain cols from the data files as the input features')

# Training
parser.add_argument('--num_workers', type=int, default=0, help='data loader num workers')
parser.add_argument('--itr', type=int, default=2, help='experiments times')
parser.add_argument('--train_epochs', type=int, default=6, help='train epochs')
parser.add_argument('--batch_size', type=int, default=32, help='batch size of train input data')
parser.add_argument('--patience', type=int, default=3, help='early stopping patience')
parser.add_argument('--learning_rate', type=float, default=0.0001, help='optimizer learning rate')
parser.add_argument('--des', type=str, default='test', help='exp description')
parser.add_argument('--loss', type=str, default='mse', help='loss function')
parser.add_argument('--lradj', type=str, default='type1', help='adjust learning rate')
parser.add_argument('--use_amp', action='store_true', help='use automatic mixed precision training', default=False)
parser.add_argument('--inverse', action='store_true', help='inverse output data', default=False)

# Hardware (caution: type=bool turns any non-empty string, even 'False', into True)
parser.add_argument('--use_gpu', type=bool, default=True, help='use gpu')
parser.add_argument('--gpu', type=int, default=0, help='gpu')
parser.add_argument('--use_multi_gpu', action='store_true', help='use multiple gpus', default=False)
parser.add_argument('--devices', type=str, default='0,1,2,3', help='device ids of multiple gpus')

args = parser.parse_args()

# Fall back to the CPU when CUDA is not available
args.use_gpu = True if torch.cuda.is_available() and args.use_gpu else False
```
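Three argparse behaviours matter when editing these options: why removing `required=True` helps, that `--distil` and `--mix` use `store_false` (so passing the flag turns the feature off), and that `type=bool` does not parse the string `'False'` as False. The minimal sketch below uses a reduced parser that re-declares only those options, not the full one from main_informer.py:

```python
import argparse

# A reduced parser that re-declares three options the same way main_informer.py does
parser = argparse.ArgumentParser()
parser.add_argument('--distil', action='store_false', default=True)
parser.add_argument('--mix', action='store_false', default=True)
parser.add_argument('--use_gpu', type=bool, default=True)

defaults = parser.parse_args([])
print(defaults.distil, defaults.mix, defaults.use_gpu)  # True True True

# store_false: passing the flag switches distilling OFF
no_distil = parser.parse_args(['--distil'])
print(no_distil.distil)  # False

# type=bool calls bool('False'), and every non-empty string is truthy
still_gpu = parser.parse_args(['--use_gpu', 'False'])
print(still_gpu.use_gpu)  # True

# With required=True, omitting the option aborts even though a default is given
strict = argparse.ArgumentParser()
strict.add_argument('--model', type=str, required=True, default='informer')
try:
    strict.parse_args([])
    required_failed = False
except SystemExit:  # argparse prints the error and exits
    required_failed = True
print(required_failed)  # True
```

So to force CPU training you can rely on the `torch.cuda.is_available()` check, or pass an empty string (`--use_gpu ''`), which is the only value `bool()` turns into False.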

Data file parameters

- Because I am running on a laptop, I could only experiment with a small dataset, namely the WTH dataset below.

```python
# Per dataset: file name ('data'), target column ('T'),
# and [enc_in, dec_in, c_out] for each features mode ('M', 'S', 'MS')
data_parser = {
    'ETTh1': {'data': 'ETTh1.csv', 'T': 'OT', 'M': [7, 7, 7], 'S': [1, 1, 1], 'MS': [7, 7, 1]},
    'ETTh2': {'data': 'ETTh2.csv', 'T': 'OT', 'M': [7, 7, 7], 'S': [1, 1, 1], 'MS': [7, 7, 1]},
    'ETTm1': {'data': 'ETTm1.csv', 'T': 'OT', 'M': [7, 7, 7], 'S': [1, 1, 1], 'MS': [7, 7, 1]},
    'ETTm2': {'data': 'ETTm2.csv', 'T': 'OT', 'M': [7, 7, 7], 'S': [1, 1, 1], 'MS': [7, 7, 1]},
    'WTH': {'data': 'WTH.csv', 'T': 'WetBulbCelsius', 'M': [12, 12, 12], 'S': [1, 1, 1], 'MS': [12, 12, 1]},
    'ECL': {'data': 'ECL.csv', 'T': 'MT_320', 'M': [321, 321, 321], 'S': [1, 1, 1], 'MS': [321, 321, 1]},
    'Solar': {'data': 'solar_AL.csv', 'T': 'POWER_136', 'M': [137, 137, 137], 'S': [1, 1, 1], 'MS': [137, 137, 1]},
}
```
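My understanding of main_informer.py is that, when `--data` is a key of this dict, the entry overwrites `data_path`, `target` and the three channel sizes, which is why the `--target` default of 'OT' does not matter for WTH. The sketch below mimics that step with a hypothetical helper, `apply_data_info`, and a `SimpleNamespace` standing in for the parsed args:

```python
from types import SimpleNamespace

# Two entries copied from data_parser above
data_parser = {
    'ETTh1': {'data': 'ETTh1.csv', 'T': 'OT', 'M': [7, 7, 7], 'S': [1, 1, 1], 'MS': [7, 7, 1]},
    'WTH': {'data': 'WTH.csv', 'T': 'WetBulbCelsius', 'M': [12, 12, 12], 'S': [1, 1, 1], 'MS': [12, 12, 1]},
}


def apply_data_info(args):
    """Hypothetical helper: overwrite file name, target and channel sizes from data_parser."""
    if args.data in data_parser:
        info = data_parser[args.data]
        args.data_path = info['data']
        args.target = info['T']
        args.enc_in, args.dec_in, args.c_out = info[args.features]
    return args


args = apply_data_info(SimpleNamespace(data='WTH', features='MS', target='OT',
                                       data_path='', enc_in=7, dec_in=7, c_out=7))
print(args.target, args.enc_in, args.dec_in, args.c_out)  # WetBulbCelsius 12 12 1
```

With `features='MS'` all 12 columns go into the encoder and decoder, but only one column (the target) comes out.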

Data Processing Module (data_loader)

- Starting from exp.train(setting) in main_informer.py, the train method leads into exp_informer.py, where _get_data selects the data-processing class for WTH:

```python
# Maps each --data name to its Dataset class
data_dict = {
    'ETTh1': Dataset_ETT_hour,
    'ETTh2': Dataset_ETT_hour,
    'ETTm1': Dataset_ETT_minute,
    'ETTm2': Dataset_ETT_minute,
    'WTH': Dataset_Custom,
    'ECL': Dataset_Custom,
    'Solar': Dataset_Custom,
    'custom': Dataset_Custom,
}
```

- As shown, WTH is handled by Dataset_Custom. Open data_loader.py and find the Dataset_Custom class.
- __init__ mainly stores the various parameters, so I won't dwell on it; the focus here is __read_data__:

```python
def __read_data__(self):
    self.scaler = StandardScaler()
    df_raw = pd.read_csv(os.path.join(self.root_path, self.data_path))

    # Reorder columns to ['date', ...other features..., target]
    if self.cols:
        cols = self.cols.copy()
        cols.remove(self.target)
    else:
        cols = list(df_raw.columns)
        cols.remove(self.target)
        cols.remove('date')
    df_raw = df_raw[['date'] + cols + [self.target]]

    # 70% train, 20% test, remainder validation
    num_train = int(len(df_raw) * 0.7)
    num_test = int(len(df_raw) * 0.2)
    num_vali = len(df_raw) - num_train - num_test
    # Val/test start seq_len rows early so their first window has a full input history
    border1s = [0, num_train - self.seq_len, len(df_raw) - num_test - self.seq_len]
    border2s = [num_train, num_train + num_vali, len(df_raw)]
    border1 = border1s[self.set_type]  # set_type: 0 = train, 1 = val, 2 = test
    border2 = border2s[self.set_type]

    if self.features == 'M' or self.features == 'MS':
        cols_data = df_raw.columns[1:]  # every column except 'date'
        df_data = df_raw[cols_data]
    elif self.features == 'S':
        df_data = df_raw[[self.target]]

    if self.scale:
        # Fit the scaler on the training split only, then transform the whole series
        train_data = df_data[border1s[0]:border2s[0]]
        self.scaler.fit(train_data.values)
        data = self.scaler.transform(df_data.values)
    else:
        data = df_data.values

    df_stamp = df_raw[['date']][border1:border2]
    df_stamp['date'] = pd.to_datetime(df_stamp.date)
    data_stamp = time_features(df_stamp, timeenc=self.timeenc, freq=self.freq)

    self.data_x = data[border1:border2]
    if self.inverse:
        self.data_y = df_data.values[border1:border2]  # unscaled targets
    else:
        self.data_y = data[border1:border2]
    self.data_stamp = data_stamp
```
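The border arithmetic above, combined with `__len__` (rows minus `seq_len` minus `pred_len` plus one), determines how many samples each split yields. The sketch below recomputes it with a hypothetical function `split_sizes`. I have not counted the rows of WTH.csv myself: 35,064 is an assumed row count, chosen because it reproduces the `train 24425 / val 3485 / test 6989` sizes printed in the log at the end of this post.

```python
def split_sizes(n_rows, seq_len=96, pred_len=24):
    """Mirror the 70/20/10 border logic of __read_data__ plus the __len__ formula."""
    num_train = int(n_rows * 0.7)
    num_test = int(n_rows * 0.2)
    num_vali = n_rows - num_train - num_test
    border1s = [0, num_train - seq_len, n_rows - num_test - seq_len]
    border2s = [num_train, num_train + num_vali, n_rows]
    # Samples per split = rows in the split - seq_len - pred_len + 1
    return [(b2 - b1) - seq_len - pred_len + 1 for b1, b2 in zip(border1s, border2s)]


print(split_sizes(35064))  # [24425, 3485, 6989]
```

The `- seq_len` shift in `border1s` is what lets the validation and test splits start predicting right at their boundary: their first input window reaches back into the previous split.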

- Note the time_features function, which extracts date features. For example, 't': ['month','day','weekday','hour','minute'] means extracting the month, day, weekday, hour, and minute. See timefeatures.py for details.

- Likewise, here is __getitem__:

```python
def __getitem__(self, index):
    # Encoder window: [s_begin, s_end)
    s_begin = index
    s_end = s_begin + self.seq_len
    # Decoder window: the last label_len steps of the encoder window plus pred_len future steps
    r_begin = s_end - self.label_len
    r_end = r_begin + self.label_len + self.pred_len

    seq_x = self.data_x[s_begin:s_end]
    if self.inverse:
        seq_y = np.concatenate([self.data_x[r_begin:r_begin + self.label_len], self.data_y[r_begin + self.label_len:r_end]], 0)
    else:
        seq_y = self.data_y[r_begin:r_end]
    seq_x_mark = self.data_stamp[s_begin:s_end]
    seq_y_mark = self.data_stamp[r_begin:r_end]

    return seq_x, seq_y, seq_x_mark, seq_y_mark

def __len__(self):
    # Number of complete (input, output) windows
    return len(self.data_x) - self.seq_len - self.pred_len + 1

def inverse_transform(self, data):
    return self.scaler.inverse_transform(data)
```
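To see how the encoder and decoder windows overlap, here is a small sketch that returns only the `[begin, end)` index ranges `__getitem__` would slice; `window_indices` is a hypothetical name and the defaults are the lengths used in this post:

```python
def window_indices(index, seq_len=96, label_len=48, pred_len=24):
    """Return the [begin, end) ranges that __getitem__ slices for one sample."""
    s_begin = index
    s_end = s_begin + seq_len
    r_begin = s_end - label_len
    r_end = r_begin + label_len + pred_len
    return (s_begin, s_end), (r_begin, r_end)


enc, dec = window_indices(0)
print(enc, dec)  # (0, 96) (48, 120)
print(dec[1] - dec[0])  # 72 = label_len + pred_len
```

Rows 48 to 95 appear in both windows: they serve as the decoder's known "start token", and only rows 96 to 119 are actually being forecast.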

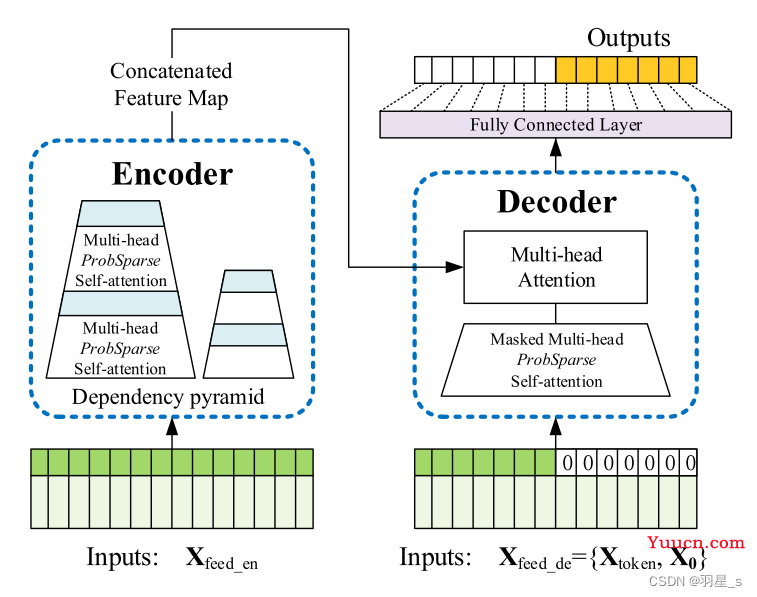

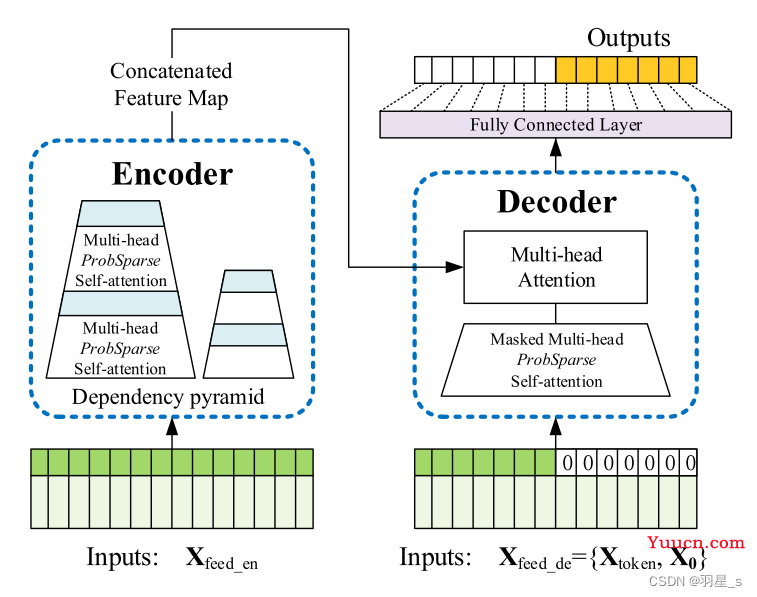

Informer Model Architecture (model)

- Here is the architecture diagram from the Informer paper for reference.

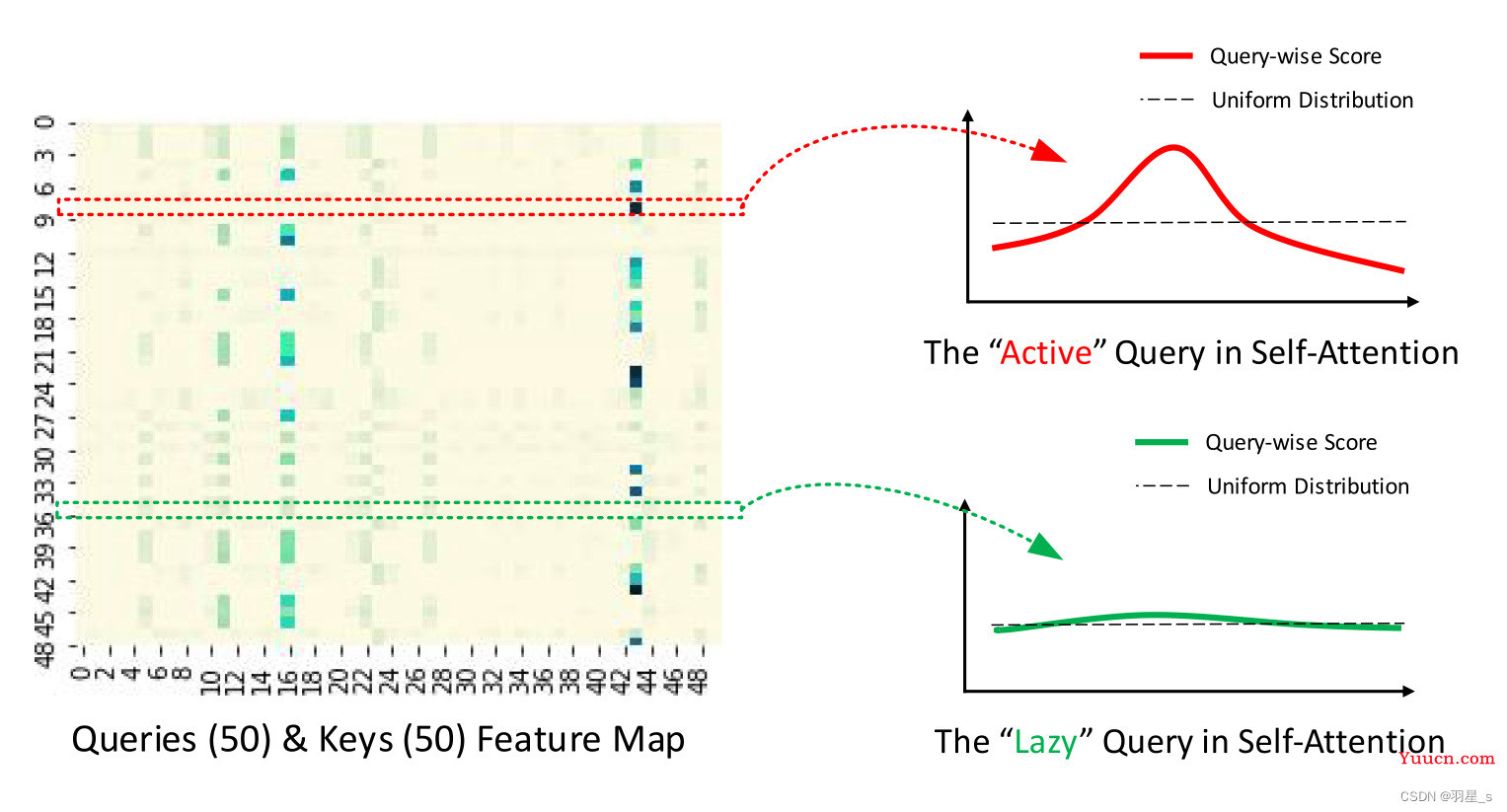

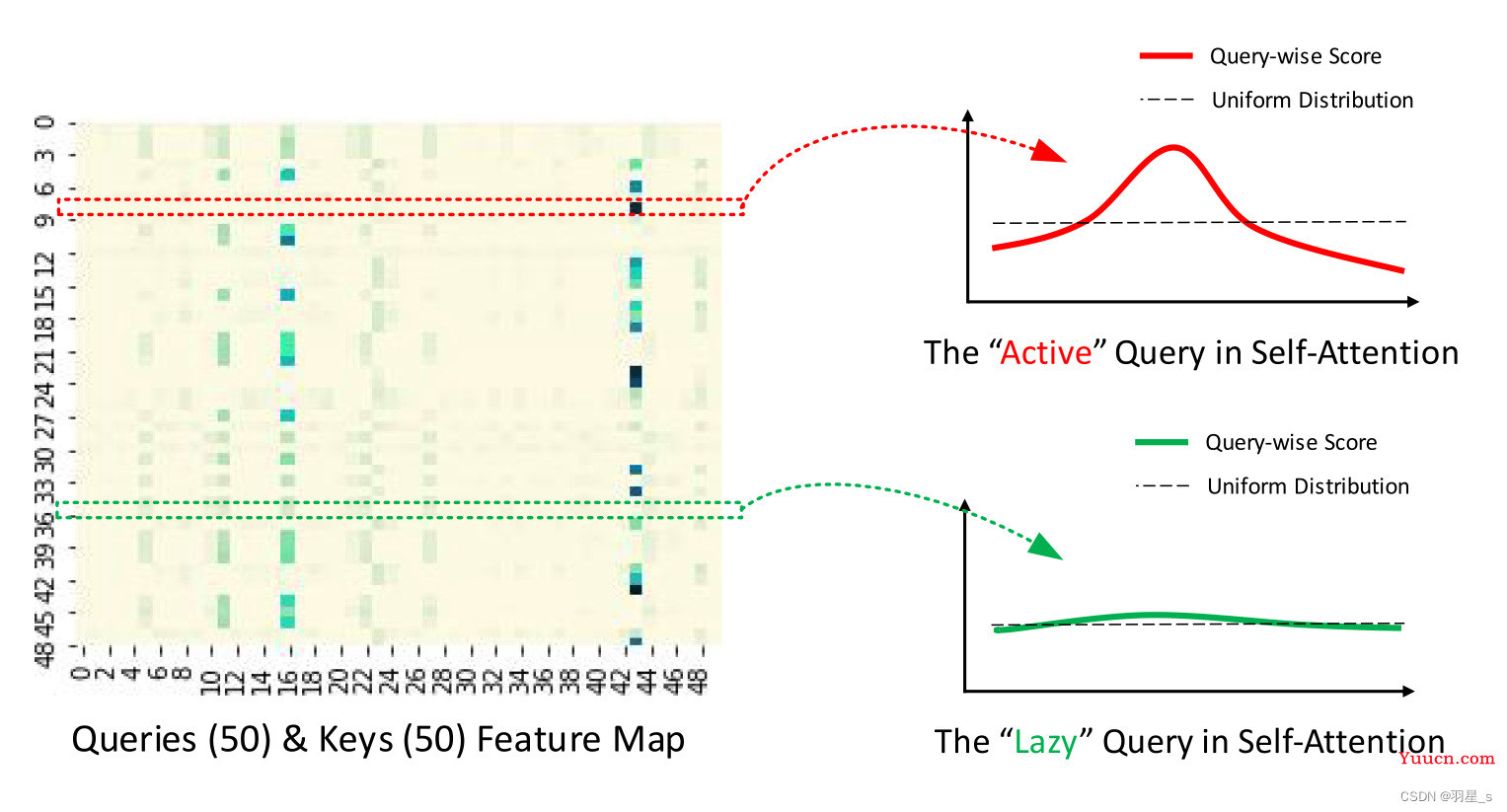

- Why keys (K) are sampled, and how the selection works
- First, open exp_informer.py; the train function is where the network is called.

```python
def train(self, setting):
    train_data, train_loader = self._get_data(flag='train')
    vali_data, vali_loader = self._get_data(flag='val')
    test_data, test_loader = self._get_data(flag='test')

    path = os.path.join(self.args.checkpoints, setting)
    if not os.path.exists(path):
        os.makedirs(path)

    time_now = time.time()

    train_steps = len(train_loader)
    early_stopping = EarlyStopping(patience=self.args.patience, verbose=True)

    model_optim = self._select_optimizer()
    criterion = self._select_criterion()

    if self.args.use_amp:
        scaler = torch.cuda.amp.GradScaler()

    for epoch in range(self.args.train_epochs):
        iter_count = 0
        train_loss = []

        self.model.train()
        epoch_time = time.time()
        for i, (batch_x, batch_y, batch_x_mark, batch_y_mark) in enumerate(train_loader):
            iter_count += 1

            model_optim.zero_grad()
            # Forward pass (see _process_one_batch)
            pred, true = self._process_one_batch(
                train_data, batch_x, batch_y, batch_x_mark, batch_y_mark)
            loss = criterion(pred, true)
            train_loss.append(loss.item())

            # Progress report every 100 iterations
            if (i + 1) % 100 == 0:
                print("\titers: {0}, epoch: {1} | loss: {2:.7f}".format(i + 1, epoch + 1, loss.item()))
                speed = (time.time() - time_now) / iter_count
                left_time = speed * ((self.args.train_epochs - epoch) * train_steps - i)
                print('\tspeed: {:.4f}s/iter; left time: {:.4f}s'.format(speed, left_time))
                iter_count = 0
                time_now = time.time()

            if self.args.use_amp:
                scaler.scale(loss).backward()
                scaler.step(model_optim)
                scaler.update()
            else:
                loss.backward()
                model_optim.step()

        print("Epoch: {} cost time: {}".format(epoch + 1, time.time() - epoch_time))
        train_loss = np.average(train_loss)
        vali_loss = self.vali(vali_data, vali_loader, criterion)
        test_loss = self.vali(test_data, test_loader, criterion)

        print("Epoch: {0}, Steps: {1} | Train Loss: {2:.7f} Vali Loss: {3:.7f} Test Loss: {4:.7f}".format(
            epoch + 1, train_steps, train_loss, vali_loss, test_loss))
        # Saves a checkpoint when the validation loss improves
        early_stopping(vali_loss, self.model, path)
        if early_stopping.early_stop:
            print("Early stopping")
            break

        adjust_learning_rate(model_optim, epoch + 1, self.args)

    # Reload the best checkpoint before returning
    best_model_path = path + '/' + 'checkpoint.pth'
    self.model.load_state_dict(torch.load(best_model_path))

    return self.model
```
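The loop stops as soon as `EarlyStopping` reports `early_stop`. The repo's class is not shown in this post, so below is a simplified stand-in (hypothetical name `EarlyStoppingSketch`, no checkpoint saving) that counts epochs without a new best validation loss and stops after `patience` of them. Replaying the `Vali Loss` values from the log at the end of this post reproduces where both runs stopped:

```python
class EarlyStoppingSketch:
    """Simplified early stopping on validation loss (stand-in, not the repo's class)."""

    def __init__(self, patience=3, delta=0.0):
        self.patience = patience
        self.delta = delta
        self.best = None
        self.counter = 0
        self.early_stop = False

    def __call__(self, val_loss):
        if self.best is None or val_loss < self.best - self.delta:
            self.best = val_loss  # the real class would save checkpoint.pth here
            self.counter = 0
        else:
            self.counter += 1
            if self.counter >= self.patience:
                self.early_stop = True


def replay(vali_losses, patience=3):
    """Return (number of epochs run, best validation loss)."""
    stopper = EarlyStoppingSketch(patience)
    for epoch, loss in enumerate(vali_losses, start=1):
        stopper(loss)
        if stopper.early_stop:
            return epoch, stopper.best
    return len(vali_losses), stopper.best


# Vali Loss values of the two runs in the log
print(replay([0.4002144, 0.3963071, 0.4155475, 0.4230270, 0.4305894]))  # (5, 0.3963071)
print(replay([0.3947107, 0.4018708, 0.4189631, 0.4390603]))  # (4, 0.3947107)
```

This is also why `train_epochs=6` never runs to completion here: the validation loss bottoms out at epoch 2 (run 0) or epoch 1 (run 1).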

- In the training loop, note _process_one_batch; let's step into that method:

```python
def _process_one_batch(self, dataset_object, batch_x, batch_y, batch_x_mark, batch_y_mark):
    batch_x = batch_x.float().to(self.device)
    batch_y = batch_y.float()

    batch_x_mark = batch_x_mark.float().to(self.device)
    batch_y_mark = batch_y_mark.float().to(self.device)

    # Decoder input: the known label_len steps followed by pred_len placeholders
    if self.args.padding == 0:
        dec_inp = torch.zeros([batch_y.shape[0], self.args.pred_len, batch_y.shape[-1]]).float()
    elif self.args.padding == 1:
        dec_inp = torch.ones([batch_y.shape[0], self.args.pred_len, batch_y.shape[-1]]).float()
    dec_inp = torch.cat([batch_y[:, :self.args.label_len, :], dec_inp], dim=1).float().to(self.device)

    # Encoder-decoder forward pass
    if self.args.use_amp:
        with torch.cuda.amp.autocast():
            if self.args.output_attention:
                outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]
            else:
                outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)
    else:
        if self.args.output_attention:
            outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)[0]
        else:
            outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark)
    if self.args.inverse:
        outputs = dataset_object.inverse_transform(outputs)
    # For MS only the last column (the target) is compared; otherwise all columns
    f_dim = -1 if self.args.features == 'MS' else 0
    batch_y = batch_y[:, -self.args.pred_len:, f_dim:].to(self.device)

    return outputs, batch_y
```
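The decoder input built above is the "generative" trick: the known last `label_len` values plus `pred_len` zeros (with `padding == 0`), so the whole horizon is predicted in one forward pass. A numpy sketch of the same tensor shapes, using random data in place of a real batch:

```python
import numpy as np

batch, label_len, pred_len, n_feat = 32, 48, 24, 12
rng = np.random.default_rng(0)
batch_y = rng.standard_normal((batch, label_len + pred_len, n_feat))  # stand-in batch

# padding == 0: zeros stand in for the unknown future values
placeholder = np.zeros((batch, pred_len, n_feat))
dec_inp = np.concatenate([batch_y[:, :label_len, :], placeholder], axis=1)
print(dec_inp.shape)  # (32, 72, 12)

# Ground truth for the loss: the last pred_len steps (f_dim = -1 when features == 'MS')
f_dim = 0
target = batch_y[:, -pred_len:, f_dim:]
print(target.shape)  # (32, 24, 12)
```

The real future values are still in `batch_y`, but the model only ever sees the zeros in their place; they are used solely as the loss target.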

- As you can see, outputs = self.model(batch_x, batch_x_mark, dec_inp, batch_y_mark) is where Informer's core architecture (the most important part) is invoked.
- Open model.py, find the Informer class, and go straight to forward:

```python
def forward(self, x_enc, x_mark_enc, x_dec, x_mark_dec,
            enc_self_mask=None, dec_self_mask=None, dec_enc_mask=None):
    enc_out = self.enc_embedding(x_enc, x_mark_enc)
    enc_out, attns = self.encoder(enc_out, attn_mask=enc_self_mask)

    dec_out = self.dec_embedding(x_dec, x_mark_dec)
    dec_out = self.decoder(dec_out, enc_out, x_mask=dec_self_mask, cross_mask=dec_enc_mask)
    dec_out = self.projection(dec_out)

    # Keep only the last pred_len steps: [B, pred_len, c_out]
    if self.output_attention:
        return dec_out[:, -self.pred_len:, :], attns
    else:
        return dec_out[:, -self.pred_len:, :]
```

Encoder Embedding

```python
import torch.nn as nn

# TokenEmbedding, PositionalEmbedding, TemporalEmbedding and TimeFeatureEmbedding
# are defined in the same file (embed.py)


class DataEmbedding(nn.Module):
    def __init__(self, c_in, d_model, embed_type='fixed', freq='h', dropout=0.1):
        super(DataEmbedding, self).__init__()

        self.value_embedding = TokenEmbedding(c_in=c_in, d_model=d_model)
        self.position_embedding = PositionalEmbedding(d_model=d_model)
        self.temporal_embedding = TemporalEmbedding(d_model=d_model, embed_type=embed_type, freq=freq) if embed_type != 'timeF' else TimeFeatureEmbedding(d_model=d_model, embed_type=embed_type, freq=freq)

        self.dropout = nn.Dropout(p=dropout)

    def forward(self, x, x_mark):
        # Sum of value, position and calendar (timestamp) embeddings
        x = self.value_embedding(x) + self.position_embedding(x) + self.temporal_embedding(x_mark)
        return self.dropout(x)
```
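`PositionalEmbedding` itself is not shown here. Assuming it follows the standard sine/cosine encoding from the Transformer paper (which is what I recall from embed.py, so check the file), the table it adds to every input can be sketched in numpy as:

```python
import math

import numpy as np


def sinusoidal_positions(max_len, d_model):
    """Standard Transformer sine/cosine position table of shape (max_len, d_model)."""
    position = np.arange(max_len)[:, None]
    div_term = np.exp(np.arange(0, d_model, 2) * -(math.log(10000.0) / d_model))
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(position * div_term)  # even dimensions
    pe[:, 1::2] = np.cos(position * div_term)  # odd dimensions
    return pe


pe = sinusoidal_positions(96, 512)  # seq_len x d_model
print(pe.shape)  # (96, 512)
```

The position table depends only on the index within the window; the absolute date information comes from the temporal embedding of `x_mark` instead.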

Encoder Module

```python
import torch.nn as nn


class Encoder(nn.Module):
    def __init__(self, attn_layers, conv_layers=None, norm_layer=None):
        super(Encoder, self).__init__()
        self.attn_layers = nn.ModuleList(attn_layers)
        self.conv_layers = nn.ModuleList(conv_layers) if conv_layers is not None else None
        self.norm = norm_layer

    def forward(self, x, attn_mask=None):
        # x: [B, L, D]
        attns = []
        if self.conv_layers is not None:
            # Distilling: each attention layer except the last is followed by a conv layer
            for attn_layer, conv_layer in zip(self.attn_layers, self.conv_layers):
                x, attn = attn_layer(x, attn_mask=attn_mask)
                x = conv_layer(x)
                attns.append(attn)
            x, attn = self.attn_layers[-1](x, attn_mask=attn_mask)
            attns.append(attn)
        else:
            for attn_layer in self.attn_layers:
                x, attn = attn_layer(x, attn_mask=attn_mask)
                attns.append(attn)

        if self.norm is not None:
            x = self.norm(x)

        return x, attns
```
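With distilling on, the sequence shrinks after each conv layer. As far as I can tell, model.py builds `e_layers - 1` conv layers, and each `ConvLayer` keeps the length through its convolution and then halves it with `MaxPool1d(kernel_size=3, stride=2, padding=1)`; treat both as assumptions. Under them, the resulting lengths follow from PyTorch's pooling output-size formula:

```python
def distilled_length(seq_len, n_conv_layers):
    """Sequence length after n distilling layers, assuming each ends with
    MaxPool1d(kernel_size=3, stride=2, padding=1) and its conv keeps the length."""
    length = seq_len
    for _ in range(n_conv_layers):
        # Pooling output size: floor((L + 2 * padding - kernel_size) / stride) + 1
        length = (length + 2 * 1 - 3) // 2 + 1
    return length


# e_layers=2 with distil=True gives e_layers - 1 = 1 conv layer
print(distilled_length(96, 1))  # 48
print(distilled_length(96, 2))  # 24
```

So with the settings in this post, the second encoder layer attends over 48 positions, and the decoder's cross-attention reads an encoder output of length 48 rather than 96.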

- Step into the EncoderLayer class to find the attention computation:

```python
import torch.nn as nn
import torch.nn.functional as F


class EncoderLayer(nn.Module):
    def __init__(self, attention, d_model, d_ff=None, dropout=0.1, activation="relu"):
        super(EncoderLayer, self).__init__()
        d_ff = d_ff or 4 * d_model
        self.attention = attention
        # Position-wise feed-forward network written as two 1x1 convolutions
        self.conv1 = nn.Conv1d(in_channels=d_model, out_channels=d_ff, kernel_size=1)
        self.conv2 = nn.Conv1d(in_channels=d_ff, out_channels=d_model, kernel_size=1)
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)
        self.activation = F.relu if activation == "relu" else F.gelu

    def forward(self, x, attn_mask=None):
        new_x, attn = self.attention(
            x, x, x,
            attn_mask=attn_mask
        )
        # Residual connection + LayerNorm
        x = x + self.dropout(new_x)

        y = x = self.norm1(x)
        # Conv1d expects [B, C, L], hence the transposes
        y = self.dropout(self.activation(self.conv1(y.transpose(-1, 1))))
        y = self.dropout(self.conv2(y).transpose(-1, 1))

        return self.norm2(x + y), attn
```

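- Note this line in the code: new_x, attn = self.attention(x, x, x, attn_mask=attn_mask). The same tensor x is passed as queries, keys, and values, which is what makes this self-attention.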
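For reference, here is ordinary (full) scaled dot-product self-attention in numpy, single head and no batch, with random matrices standing in for the learned projections. It makes the cost visible: every one of the L queries is scored against every key, giving an L x L matrix, which is exactly what ProbSparse attention avoids computing in full.

```python
import numpy as np


def full_self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention: queries, keys and values all come from x."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over keys
    return weights @ v, weights


rng = np.random.default_rng(0)
L, d_model, d_k = 96, 16, 8
x = rng.standard_normal((L, d_model))
w_q, w_k, w_v = (rng.standard_normal((d_model, d_k)) for _ in range(3))
out, attn = full_self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)  # (96, 8) (96, 96)
```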

Attention Layer

- The attention layer lives in attn.py; find the AttentionLayer class:

```python
import torch.nn as nn


class AttentionLayer(nn.Module):
    def __init__(self, attention, d_model, n_heads,
                 d_keys=None, d_values=None, mix=False):
        super(AttentionLayer, self).__init__()

        d_keys = d_keys or (d_model // n_heads)
        d_values = d_values or (d_model // n_heads)

        self.inner_attention = attention
        self.query_projection = nn.Linear(d_model, d_keys * n_heads)
        self.key_projection = nn.Linear(d_model, d_keys * n_heads)
        self.value_projection = nn.Linear(d_model, d_values * n_heads)
        self.out_projection = nn.Linear(d_values * n_heads, d_model)
        self.n_heads = n_heads
        self.mix = mix

    def forward(self, queries, keys, values, attn_mask):
        B, L, _ = queries.shape
        _, S, _ = keys.shape
        H = self.n_heads

        # Project, then split into H heads: [B, L, H, d_keys]
        queries = self.query_projection(queries).view(B, L, H, -1)
        keys = self.key_projection(keys).view(B, S, H, -1)
        values = self.value_projection(values).view(B, S, H, -1)

        out, attn = self.inner_attention(
            queries,
            keys,
            values,
            attn_mask
        )
        if self.mix:
            out = out.transpose(2, 1).contiguous()
        # Merge the heads back into one d_model-sized vector per step
        out = out.view(B, L, -1)

        return self.out_projection(out), attn
```
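The only unusual step in `AttentionLayer` is `mix`. The inner attention returns `[B, L, H, D]`; without mixing, each output row is the concatenation of the H heads at that time step. With `mix=True`, the tensor is transposed to `[B, H, L, D]` before the flattening `view`, so each row ends up holding values from different time steps of the same head. A numpy sketch of just the reshaping, with small made-up sizes:

```python
import numpy as np

B, L, H, D = 2, 5, 4, 3  # batch, length, heads, per-head dim (d_model = H * D)
out = np.arange(B * L * H * D).reshape(B, L, H, D)  # inner attention output

# mix=False: concatenate the heads at each time step
no_mix = out.reshape(B, L, -1)
# mix=True: transpose to [B, H, L, D] first, then flatten back to [B, L, H * D]
mixed = out.transpose(0, 2, 1, 3).reshape(B, L, -1)

print(no_mix.shape, mixed.shape)  # (2, 5, 12) (2, 5, 12)
print(np.array_equal(no_mix[0, 0], out[0, 0].ravel()))  # True
print(np.array_equal(mixed[0, 0], out[0, :4, 0, :].ravel()))  # True
```

The shapes are identical; only the arrangement of values fed to `out_projection` changes.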

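- Note self.inner_attention in the code; it leads to the ProbAttention class.
- Within it, _prob_QK, which selects the important Q and K, is the core of the model and deserves a careful read. Here is the formula: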

$$\overline{M}(q_i, K) = \max_{j}\left\{\frac{q_i k_j^{T}}{\sqrt{d}}\right\} - \frac{1}{L_K}\sum_{j=1}^{L_K}\frac{q_i k_j^{T}}{\sqrt{d}}$$

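- _get_initial_context computes the initial context from V (the mean of V, or its cumulative sum when masking is on), and _update_context overwrites the context rows of the selected important queries with their actual attention output.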
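The sparsity measurement in the formula is large for "peaky" queries whose scores are dominated by a few keys, and close to zero for queries that attend almost uniformly. The numpy sketch below computes it exactly over all keys and keeps the top `n_top` queries. Note the difference from `_prob_QK`: the repo code scores each query against only `sample_k` randomly sampled keys, so its M is an approximation, while the sketch uses every key.

```python
import numpy as np


def sparsity_measure(q, k):
    """M(q_i, K) = max_j(q_i k_j^T / sqrt(d)) - mean_j(q_i k_j^T / sqrt(d)), over all keys."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return scores.max(axis=-1) - scores.mean(axis=-1)


def top_queries(q, k, n_top):
    """Indices of the n_top queries with the largest sparsity measure."""
    m = sparsity_measure(q, k)
    return np.argsort(m)[::-1][:n_top]


rng = np.random.default_rng(0)
L, d = 96, 64
q = rng.standard_normal((L, d))
k = rng.standard_normal((L, d))
q[7] = 5 * k[3]  # make query 7 attend sharply to key 3

idx = top_queries(q, k, n_top=25)
print(len(idx), idx[0])  # 25 7
```

Only the selected queries get a real attention row; every other query keeps the cheap initial context, which is where the O(L log L) cost comes from.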

```python
from math import sqrt

import numpy as np
import torch
import torch.nn as nn

from utils.masking import ProbMask  # helper module in the Informer repository


class ProbAttention(nn.Module):
    def __init__(self, mask_flag=True, factor=5, scale=None, attention_dropout=0.1, output_attention=False):
        super(ProbAttention, self).__init__()
        self.factor = factor
        self.scale = scale
        self.mask_flag = mask_flag
        self.output_attention = output_attention
        self.dropout = nn.Dropout(attention_dropout)

    def _prob_QK(self, Q, K, sample_k, n_top):
        # Q, K: [B, H, L, D]
        B, H, L_K, E = K.shape
        _, _, L_Q, _ = Q.shape

        # Score every query against sample_k randomly sampled keys
        K_expand = K.unsqueeze(-3).expand(B, H, L_Q, L_K, E)
        index_sample = torch.randint(L_K, (L_Q, sample_k))
        K_sample = K_expand[:, :, torch.arange(L_Q).unsqueeze(1), index_sample, :]
        Q_K_sample = torch.matmul(Q.unsqueeze(-2), K_sample.transpose(-2, -1)).squeeze(-2)

        # Sparsity measurement on the sampled scores: max minus (sum / L_K)
        M = Q_K_sample.max(-1)[0] - torch.div(Q_K_sample.sum(-1), L_K)
        M_top = M.topk(n_top, sorted=False)[1]

        # Full Q K^T, but only for the n_top selected queries
        Q_reduce = Q[torch.arange(B)[:, None, None],
                     torch.arange(H)[None, :, None],
                     M_top, :]
        Q_K = torch.matmul(Q_reduce, K.transpose(-2, -1))

        return Q_K, M_top

    def _get_initial_context(self, V, L_Q):
        B, H, L_V, D = V.shape
        if not self.mask_flag:
            # Unselected queries fall back to the mean of V
            V_sum = V.mean(dim=-2)
            contex = V_sum.unsqueeze(-2).expand(B, H, L_Q, V_sum.shape[-1]).clone()
        else:
            # With masking, position i starts from the cumulative sum of V[0..i]
            assert L_Q == L_V  # masking is only used for self-attention
            contex = V.cumsum(dim=-2)
        return contex

    def _update_context(self, context_in, V, scores, index, L_Q, attn_mask):
        B, H, L_V, D = V.shape

        if self.mask_flag:
            attn_mask = ProbMask(B, H, L_Q, index, scores, device=V.device)
            scores.masked_fill_(attn_mask.mask, -np.inf)

        attn = torch.softmax(scores, dim=-1)

        # Overwrite the context rows of the selected queries with their attention output
        context_in[torch.arange(B)[:, None, None],
                   torch.arange(H)[None, :, None],
                   index, :] = torch.matmul(attn, V).type_as(context_in)
        if self.output_attention:
            # Unselected queries are reported with uniform attention weights
            attns = (torch.ones([B, H, L_V, L_V]) / L_V).type_as(attn).to(attn.device)
            attns[torch.arange(B)[:, None, None], torch.arange(H)[None, :, None], index, :] = attn
            return (context_in, attns)
        else:
            return (context_in, None)

    def forward(self, queries, keys, values, attn_mask):
        B, L_Q, H, D = queries.shape
        _, L_K, _, _ = keys.shape

        # [B, L, H, D] -> [B, H, L, D]
        queries = queries.transpose(2, 1)
        keys = keys.transpose(2, 1)
        values = values.transpose(2, 1)

        # Sampled keys per query (U_part) and selected queries (u): factor * ceil(ln L)
        U_part = self.factor * np.ceil(np.log(L_K)).astype('int').item()
        u = self.factor * np.ceil(np.log(L_Q)).astype('int').item()

        U_part = U_part if U_part < L_K else L_K
        u = u if u < L_Q else L_Q

        scores_top, index = self._prob_QK(queries, keys, sample_k=U_part, n_top=u)

        # Apply the 1/sqrt(d) scale factor
        scale = self.scale or 1. / sqrt(D)
        if scale is not None:
            scores_top = scores_top * scale
        # Initial context for all queries, then update the top-u queries
        context = self._get_initial_context(values, L_Q)
        context, attn = self._update_context(context, values, scores_top, index, L_Q, attn_mask)

        return context.transpose(2, 1).contiguous(), attn
```
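Two non-attention steps in `forward` are easy to check by hand: how many keys are sampled (`U_part`) and how many queries are kept (`u`), both `factor * ceil(ln L)` capped at `L`, and the initial context that unselected queries receive. The sketch below re-implements them in plain Python/numpy (hypothetical function names, unbatched `V` of shape `[L, D]`):

```python
import math

import numpy as np


def sample_sizes(L_Q, L_K, factor=5):
    """U_part keys sampled per query and u queries kept, as computed in ProbAttention.forward."""
    U_part = min(factor * math.ceil(math.log(L_K)), L_K)
    u = min(factor * math.ceil(math.log(L_Q)), L_Q)
    return U_part, u


print(sample_sizes(96, 96))  # (25, 25): first encoder layer
print(sample_sizes(48, 48))  # (20, 20): second encoder layer after distilling
print(sample_sizes(72, 72))  # (25, 25): decoder self-attention


def initial_context(v, masked):
    """Mean of V for every query, or the cumulative sum of V when masking is on."""
    if not masked:
        return np.repeat(v.mean(axis=0, keepdims=True), v.shape[0], axis=0)
    return v.cumsum(axis=0)
```

With factor 5 and L = 96, only 25 of the 96 queries get a real attention row, each scored against all keys after being selected with just 25 sampled keys.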

Decoder Embedding

- The decoder embedding is identical to the encoder embedding; just note the input shape: x_dec has shape [batch, label_len + pred_len, features], i.e. (32, 72 = 48 + 24, 12).

Decoder Module

```python
import torch.nn as nn


class Decoder(nn.Module):
    def __init__(self, layers, norm_layer=None):
        super(Decoder, self).__init__()
        self.layers = nn.ModuleList(layers)
        self.norm = norm_layer

    def forward(self, x, cross, x_mask=None, cross_mask=None):
        # x: embedded decoder input; cross: encoder output
        for layer in self.layers:
            x = layer(x, cross, x_mask=x_mask, cross_mask=cross_mask)

        if self.norm is not None:
            x = self.norm(x)

        return x
```

- The layers used above are defined in the same file; find the DecoderLayer class:

```python
import torch.nn as nn
import torch.nn.functional as F


class DecoderLayer(nn.Module):
    def __init__(self, self_attention, cross_attention, d_model, d_ff=None,
                 dropout=0.1, activation="relu"):
        super(DecoderLayer, self).__init__()
        d_ff = d_ff or 4 * d_model
        self.self_attention = self_attention
        self.cross_attention = cross_attention
        self.conv1 = nn.Conv1d(in_channels=d_model, out_channels=d_ff, kernel_size=1)
        self.conv2 = nn.Conv1d(in_channels=d_ff, out_channels=d_model, kernel_size=1)
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)
        self.dropout = nn.Dropout(dropout)
        self.activation = F.relu if activation == "relu" else F.gelu

    def forward(self, x, cross, x_mask=None, cross_mask=None):
        # 1) Self-attention over the decoder input
        x = x + self.dropout(self.self_attention(
            x, x, x,
            attn_mask=x_mask
        )[0])
        x = self.norm1(x)

        # 2) Cross-attention: queries from the decoder, keys/values from the encoder output
        x = x + self.dropout(self.cross_attention(
            x, cross, cross,
            attn_mask=cross_mask
        )[0])

        # 3) Position-wise feed-forward network
        y = x = self.norm2(x)
        y = self.dropout(self.activation(self.conv1(y.transpose(-1, 1))))
        y = self.dropout(self.conv2(y).transpose(-1, 1))

        return self.norm3(x + y)
```
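`DecoderLayer.forward` makes two attention calls with different inputs. The numpy sketch below contrasts them using plain full attention for both (a simplification: in the repo the decoder self-attention is masked ProbSparse attention). Self-attention uses the 72 decoder steps as queries, keys and values, with a causal mask so step i cannot see later steps; cross-attention uses decoder queries against the encoder output, assumed here to have length 48 after one distilling layer.

```python
import numpy as np


def attention(q, k, v, causal=False):
    """Single-head scaled dot-product attention; causal=True hides later keys."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    if causal:
        # Query i may only attend to keys j <= i (upper triangle masked out)
        mask = np.triu(np.ones((q.shape[0], k.shape[0]), dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
d = 16
dec = rng.standard_normal((72, d))  # label_len + pred_len decoder steps
enc = rng.standard_normal((48, d))  # encoder output after one distilling layer

self_out, self_w = attention(dec, dec, dec, causal=True)
cross_out, cross_w = attention(dec, enc, enc)
print(self_out.shape, cross_out.shape, cross_w.shape)  # (72, 16) (72, 16) (72, 48)
```

Both outputs keep the decoder's length of 72; the cross-attention weight matrix is rectangular because queries and keys come from different sequences.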

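- Note that the Decoder contains two attention operations, self-attention and cross-attention, whereas the Encoder has only self-attention.
- self-attention: in this example label_len + pred_len = 72, so the same selection and update steps as in the Encoder run over 72 queries (Q) and 72 keys (K). In the decoder this attention is masked, so a position cannot attend to later positions.
- cross-attention: the queries come from the Decoder, while the keys and values come from the Encoder output. In the official code this layer is built with FullAttention, so no ProbSparse query selection happens here.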

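- This concludes the model code in model.py. Back in _process_one_batch in exp_informer.py, the outputs variable holds the predictions.
- Back in the train function of exp_informer.py, with the predictions and ground truth in hand, the loss is computed, followed by the gradient update and the learning-rate adjustment.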
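The learning-rate adjustment is `adjust_learning_rate(model_optim, epoch + 1, self.args)` at the end of each epoch. With `--lradj type1`, the log below prints a rate that halves every epoch. I believe the repo's type1 rule is `learning_rate * 0.5 ** ((epoch - 1) // 1)`; treat that as an assumption. This sketch of it reproduces the logged values:

```python
import math


def type1_lr(base_lr, epoch):
    """Learning rate set after the given epoch (1-based) under the 'type1' schedule."""
    return base_lr * (0.5 ** ((epoch - 1) // 1))


# "Updating learning rate to ..." values printed after epochs 1-4 in the log
logged = [0.0001, 5e-05, 2.5e-05, 1.25e-05]
for epoch, expected in enumerate(logged, start=1):
    print(epoch, type1_lr(1e-4, epoch), math.isclose(type1_lr(1e-4, epoch), expected))
```

Because the first call (after epoch 1) passes 1, the rate stays at 1e-4 for epoch 2 as well, and the halving only takes effect from epoch 3.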

Results

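- I ran this on my own laptop; since it has no GPU, it took about 7 hours. (Note: the trained model files, along with the annotated code files, are included in the download link above.)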

train 24425

val 3485

test 6989

iters: 100, epoch: 1 | loss: 0.4753647

speed: 5.8926s/iter; left time: 26393.0550s

iters: 200, epoch: 1 | loss: 0.3887450

speed: 5.6093s/iter; left time: 24563.0934s

iters: 300, epoch: 1 | loss: 0.3397639

speed: 5.6881s/iter; left time: 24339.4008s

iters: 400, epoch: 1 | loss: 0.3773919

speed: 5.5947s/iter; left time: 23380.1260s

iters: 500, epoch: 1 | loss: 0.3424160

speed: 5.8912s/iter; left time: 24030.1962s

iters: 600, epoch: 1 | loss: 0.3589063

speed: 6.0372s/iter; left time: 24021.9204s

iters: 700, epoch: 1 | loss: 0.3522923

speed: 5.2896s/iter; left time: 20518.3927s

Epoch: 1 cost time: 4319.718204259872

Epoch: 1, Steps: 763 | Train Loss: 0.3825711 Vali Loss: 0.4002144 Test Loss: 0.3138740

Validation loss decreased (inf --> 0.400214). Saving model ...

Updating learning rate to 0.0001

iters: 100, epoch: 2 | loss: 0.3452260

speed: 12.8896s/iter; left time: 47897.7932s

iters: 200, epoch: 2 | loss: 0.2782844

speed: 4.7867s/iter; left time: 17308.6180s

iters: 300, epoch: 2 | loss: 0.2653053

speed: 4.7938s/iter; left time: 16855.0160s

iters: 400, epoch: 2 | loss: 0.3157508

speed: 4.7083s/iter; left time: 16083.5403s

iters: 500, epoch: 2 | loss: 0.3046930

speed: 4.7699s/iter; left time: 15816.8855s

iters: 600, epoch: 2 | loss: 0.2360453

speed: 4.8311s/iter; left time: 15536.9307s

iters: 700, epoch: 2 | loss: 0.2668953

speed: 4.7713s/iter; left time: 14867.4169s

Epoch: 2 cost time: 3644.3840498924255

Epoch: 2, Steps: 763 | Train Loss: 0.2945577 Vali Loss: 0.3963071 Test Loss: 0.3274192

Validation loss decreased (0.400214 --> 0.396307). Saving model ...

Updating learning rate to 5e-05

iters: 100, epoch: 3 | loss: 0.2556470

speed: 12.6569s/iter; left time: 37375.7115s

iters: 200, epoch: 3 | loss: 0.2456252

speed: 4.7655s/iter; left time: 13596.0810s

iters: 300, epoch: 3 | loss: 0.2562804

speed: 4.7336s/iter; left time: 13031.4940s

iters: 400, epoch: 3 | loss: 0.2049552

speed: 4.7622s/iter; left time: 12634.1883s

iters: 500, epoch: 3 | loss: 0.2604980

speed: 4.7524s/iter; left time: 12132.7789s

iters: 600, epoch: 3 | loss: 0.2539216

speed: 4.7413s/iter; left time: 11630.3915s

iters: 700, epoch: 3 | loss: 0.2098076

speed: 4.7394s/iter; left time: 11151.7416s

Epoch: 3 cost time: 3628.159082174301

Epoch: 3, Steps: 763 | Train Loss: 0.2486252 Vali Loss: 0.4155475 Test Loss: 0.3301197

EarlyStopping counter: 1 out of 3

Updating learning rate to 2.5e-05

iters: 100, epoch: 4 | loss: 0.2175551

speed: 12.6253s/iter; left time: 27649.4546s

iters: 200, epoch: 4 | loss: 0.2459734

speed: 4.7335s/iter; left time: 9892.9213s

iters: 300, epoch: 4 | loss: 0.2354426

speed: 4.7546s/iter; left time: 9461.6300s

iters: 400, epoch: 4 | loss: 0.2267139

speed: 4.7719s/iter; left time: 9018.9749s

iters: 500, epoch: 4 | loss: 0.2379844

speed: 4.8038s/iter; left time: 8598.7446s

iters: 600, epoch: 4 | loss: 0.2434178

speed: 4.7608s/iter; left time: 8045.7994s

iters: 700, epoch: 4 | loss: 0.2231207

speed: 4.7765s/iter; left time: 7594.6586s

Epoch: 4 cost time: 3649.547614812851

Epoch: 4, Steps: 763 | Train Loss: 0.2224283 Vali Loss: 0.4230270 Test Loss: 0.3334258

EarlyStopping counter: 2 out of 3

Updating learning rate to 1.25e-05

iters: 100, epoch: 5 | loss: 0.1837259

speed: 12.7564s/iter; left time: 18203.3974s

iters: 200, epoch: 5 | loss: 0.1708880

speed: 4.7804s/iter; left time: 6343.6200s

iters: 300, epoch: 5 | loss: 0.2529005

speed: 4.7426s/iter; left time: 5819.1675s

iters: 400, epoch: 5 | loss: 0.2434390

speed: 4.7388s/iter; left time: 5340.6568s

iters: 500, epoch: 5 | loss: 0.2078404

speed: 4.7515s/iter; left time: 4879.7921s

iters: 600, epoch: 5 | loss: 0.2372987

speed: 4.7986s/iter; left time: 4448.2748s

iters: 700, epoch: 5 | loss: 0.2022571

speed: 4.7718s/iter; left time: 3946.2739s

Epoch: 5 cost time: 3636.7107157707214

Epoch: 5, Steps: 763 | Train Loss: 0.2088229 Vali Loss: 0.4305894 Test Loss: 0.3341273

EarlyStopping counter: 3 out of 3

Early stopping

>>>>>>>testing : informer_WTH_ftM_sl96_ll48_pl24_dm512_nh8_el2_dl1_df2048_atprob_fc5_ebtimeF_dtTrue_mxTrue_test_0<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

test 6989

test shape: (218, 32, 24, 12) (218, 32, 24, 12)

test shape: (6976, 24, 12) (6976, 24, 12)

mse:0.3277873396873474, mae:0.3727897107601166

Use CPU

>>>>>>>start training : informer_WTH_ftM_sl96_ll48_pl24_dm512_nh8_el2_dl1_df2048_atprob_fc5_ebtimeF_dtTrue_mxTrue_test_1>>>>>>>>>>>>>>>>>>>>>>>>>>

train 24425

val 3485

test 6989

iters: 100, epoch: 1 | loss: 0.4508476

speed: 4.7396s/iter; left time: 21228.7904s

iters: 200, epoch: 1 | loss: 0.3859568

speed: 4.7742s/iter; left time: 20906.0895s

iters: 300, epoch: 1 | loss: 0.3749838

speed: 4.7690s/iter; left time: 20406.5500s

iters: 400, epoch: 1 | loss: 0.3673764

speed: 4.8070s/iter; left time: 20088.4627s

iters: 500, epoch: 1 | loss: 0.3068828

speed: 4.7643s/iter; left time: 19433.6961s

iters: 600, epoch: 1 | loss: 0.4173551

speed: 4.7621s/iter; left time: 18948.4516s

iters: 700, epoch: 1 | loss: 0.2720438

speed: 4.7609s/iter; left time: 18467.4719s

Epoch: 1 cost time: 3639.997560977936

Epoch: 1, Steps: 763 | Train Loss: 0.3788956 Vali Loss: 0.3947107 Test Loss: 0.3116618

Validation loss decreased (inf --> 0.394711). Saving model ...

Updating learning rate to 0.0001

iters: 100, epoch: 2 | loss: 0.3547252

speed: 12.6113s/iter; left time: 46863.7093s

iters: 200, epoch: 2 | loss: 0.3236437

speed: 4.7504s/iter; left time: 17177.4475s

iters: 300, epoch: 2 | loss: 0.2898968

speed: 4.7720s/iter; left time: 16778.2666s

iters: 400, epoch: 2 | loss: 0.3107039

speed: 4.7412s/iter; left time: 16195.8892s

iters: 500, epoch: 2 | loss: 0.2816701

speed: 4.7244s/iter; left time: 15666.2476s

iters: 600, epoch: 2 | loss: 0.2226012

speed: 4.7348s/iter; left time: 15227.0618s

iters: 700, epoch: 2 | loss: 0.2239729

speed: 4.8806s/iter; left time: 15208.0025s

Epoch: 2 cost time: 3635.6160113811493

Epoch: 2, Steps: 763 | Train Loss: 0.2962583 Vali Loss: 0.4018708 Test Loss: 0.3213752

EarlyStopping counter: 1 out of 3

Updating learning rate to 5e-05

iters: 100, epoch: 3 | loss: 0.2407307

speed: 12.5584s/iter; left time: 37084.8281s

iters: 200, epoch: 3 | loss: 0.2294409

speed: 5.1105s/iter; left time: 14580.3263s

iters: 300, epoch: 3 | loss: 0.3180184

speed: 5.9484s/iter; left time: 16376.0364s

iters: 400, epoch: 3 | loss: 0.2101320

speed: 5.7987s/iter; left time: 15384.0189s

iters: 500, epoch: 3 | loss: 0.2701742

speed: 5.5463s/iter; left time: 14159.6749s

iters: 600, epoch: 3 | loss: 0.2391748

speed: 4.8338s/iter; left time: 11857.4335s

iters: 700, epoch: 3 | loss: 0.2280931

speed: 4.7718s/iter; left time: 11228.1147s

Epoch: 3 cost time: 3975.2745430469513

Epoch: 3, Steps: 763 | Train Loss: 0.2494072 Vali Loss: 0.4189631 Test Loss: 0.3308771

EarlyStopping counter: 2 out of 3

Updating learning rate to 2.5e-05

iters: 100, epoch: 4 | loss: 0.2260314

speed: 12.7037s/iter; left time: 27821.0994s

iters: 200, epoch: 4 | loss: 0.2191769

speed: 4.7906s/iter; left time: 10012.3575s

iters: 300, epoch: 4 | loss: 0.2044496

speed: 4.7498s/iter; left time: 9452.0362s

iters: 400, epoch: 4 | loss: 0.2167130

speed: 4.7545s/iter; left time: 8985.9758s

iters: 500, epoch: 4 | loss: 0.2340788

speed: 4.7329s/iter; left time: 8471.8863s

iters: 600, epoch: 4 | loss: 0.2137127

speed: 4.7037s/iter; left time: 7949.1748s

iters: 700, epoch: 4 | loss: 0.1899967

speed: 4.7049s/iter; left time: 7480.8388s

Epoch: 4 cost time: 3624.2080821990967

Epoch: 4, Steps: 763 | Train Loss: 0.2222918 Vali Loss: 0.4390603 Test Loss: 0.3350959

EarlyStopping counter: 3 out of 3

Early stopping

>>>>>>>testing : informer_WTH_ftM_sl96_ll48_pl24_dm512_nh8_el2_dl1_df2048_atprob_fc5_ebtimeF_dtTrue_mxTrue_test_1<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

test 6989

test shape: (218, 32, 24, 12) (218, 32, 24, 12)

test shape: (6976, 24, 12) (6976, 24, 12)

mse:0.3116863965988159, mae:0.36840054392814636

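- After the run, two folders appear in the project: checkpoints holds the model file (extension .pth), and results holds three files: pred.npy (predictions), true.npy (ground truth), and metrics.npy (the evaluation metrics).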

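- The authors document on GitHub how to run prediction; for reasons of space I won't cover it here. See the follow-up post, Informer time-series model (custom project).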
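To inspect the saved predictions yourself, load pred.npy and true.npy from the results folder (assumed here to be `./results/<setting>/`) with `np.load` and score them. The log shows the test outputs being stacked as `(218, 32, 24, 12)` and flattened to `(6976, 24, 12)`: 218 full batches of 32, with the remaining 13 of the 6989 test samples presumably dropped as an incomplete last batch. The sketch below uses synthetic arrays in place of the files and plain MAE/MSE definitions:

```python
import numpy as np


def mae(pred, true):
    return np.mean(np.abs(pred - true))


def mse(pred, true):
    return np.mean((pred - true) ** 2)


# Synthetic stand-ins; for a real run use np.load on pred.npy / true.npy instead
rng = np.random.default_rng(0)
preds = rng.standard_normal((218, 32, 24, 12))  # (batches, batch_size, pred_len, features)
trues = preds + 0.5

# Flatten the batch dimensions: (218 * 32, pred_len, features)
preds = preds.reshape(-1, preds.shape[-2], preds.shape[-1])
trues = trues.reshape(-1, trues.shape[-2], trues.shape[-1])
print(preds.shape)  # (6976, 24, 12)
print(mae(preds, trues), mse(preds, trues))
```

With real files, the metrics computed this way are on the standardized scale (unless `--inverse` was set), which is the scale of the `mse`/`mae` values printed in the log.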