Here I share some notes I put together from material found online; I hope they are useful.

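Our company needed a watermark camera feature. The project is built with the uniapp framework, which on the App side can only launch the system camera and does not allow a custom camera UI. The only way forward was a custom native plugin, that is, a component written in native code and then called from the uniapp project. After about half a month of research and development the plugin was finished. It leaves the camera UI entirely up to the developer; all you need to do is include the component on the camera page: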

<!-- Camera native plugin START -->
<camera-view
    ref="cameraObj"
    class="camera_view"
    :defaultCamera="currentCamera"
    @receiveRatio="receiveRatio"
    @takePhotoSuccess="takePhotoSuccess"
    @takePhotoFail="takePhotoFail"
    @recordSuccess="recordSuccess"
    @recordFail="recordFail"
    @receiveInfo="onError"
    :style="'width:'+previewWidth+'px;height:'+previewHeight+'px;margin-left:-'+marginLeft+'px'"
>
</camera-view>
<!-- Camera native plugin END -->

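It is best to make the component full screen, overlay your own buttons, text and other elements on top of it to build the UI, and then call the APIs the plugin provides to drive the camera hardware.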
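The `previewWidth`, `previewHeight` and `marginLeft` values bound in the template are not defined anywhere in this article. As a minimal sketch, assuming the `receiveRatio` event reports the preview's height-to-width ratio (an assumption; the real payload may differ), a full-screen "cover" layout could be computed as follows. `computePreviewLayout` is a hypothetical helper, not part of the plugin.

```javascript
// Hypothetical helper: size the preview so that it covers the whole screen.
// `ratio` is assumed to be preview height / preview width (for example 1.5).
function computePreviewLayout(screenWidth, screenHeight, ratio) {
  // Start from the full screen width and derive the height from the ratio.
  let previewWidth = screenWidth;
  let previewHeight = screenWidth * ratio;
  let marginLeft = 0;
  if (previewHeight < screenHeight) {
    // Too short: fit the height instead and let the width overflow.
    previewHeight = screenHeight;
    previewWidth = screenHeight / ratio;
    // Shift left by half of the overflow so the picture stays centered.
    marginLeft = (previewWidth - screenWidth) / 2;
  }
  return { previewWidth, previewHeight, marginLeft };
}

// Example: a 400x900 screen with a ratio of 1.5
// gives { previewWidth: 600, previewHeight: 900, marginLeft: 100 }.
const layout = computePreviewLayout(400, 900, 1.5);
```

The positive `marginLeft` matches the template, which applies it as a negative `margin-left`.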

// Take a photo
takePhoto(){
    console.log("Start taking photo")
    // Set the watermark
    this.$refs.cameraObj.addWaterText({
        "date":this.tempDateStr || "",
        "logo":"·七彩云·|水印相机",
        "address":(this.showAddress ? this.address:""),
        "time":this.tempTimeStr || "",
        "week":this.weekDay || "",
        "remark":(this.showRemark ? this.remark:"")
    });
    // Call the photo API
    this.$refs.cameraObj.takePhoto();
},

// Toggle the flash
switchFlash(){
    if(this.flashStatus === 0){
        this.flashStatus = 1;
        this.$refs.cameraObj.openFlash();
    }else{
        this.flashStatus = 0;
        this.$refs.cameraObj.closeFlash();
    }
},

// Switch between the back ("0") and front ("1") cameras
switchCamera(){
    if(this.currentCamera === "0"){
        this.currentCamera = "1";
        this.$refs.cameraObj.openFront();
    }else{
        this.currentCamera = "0";
        this.$refs.cameraObj.openBack();
    }
},
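The `tempDateStr`, `tempTimeStr` and `weekDay` fields passed to `addWaterText` are page state that the article does not show. A minimal sketch of how such a payload might be assembled is given here; `buildWatermark` is a hypothetical helper, and the date and time formats and the weekday labels are assumptions rather than anything the plugin requires.

```javascript
// Hypothetical helper that builds the object passed to addWaterText().
// Formats and weekday labels are illustrative assumptions.
const WEEK_DAYS = ["Sunday", "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday"];

function pad2(n) {
  return String(n).padStart(2, "0");
}

function buildWatermark(now, options = {}) {
  return {
    date: `${now.getFullYear()}-${pad2(now.getMonth() + 1)}-${pad2(now.getDate())}`,
    time: `${pad2(now.getHours())}:${pad2(now.getMinutes())}`,
    week: WEEK_DAYS[now.getDay()],
    logo: options.logo || "",
    // Empty strings mirror the showAddress / showRemark switches in takePhoto().
    address: options.showAddress ? options.address || "" : "",
    remark: options.showRemark ? options.remark || "" : "",
  };
}

// Usage inside the page would look like:
// this.$refs.cameraObj.addWaterText(buildWatermark(new Date(), { showRemark: true, remark: "Site A" }));
```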

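Native plugin development documentation

Native plugins on both Android and iOS come in two extension types:

1. Module: extends a specific non-UI capability. (Put plainly, it only cares about functionality.)

2. Component: extends a native control that implements special functionality. (The focus is on the UI.)

For example, a custom native button has to be a Component because it needs a UI. A plugin that only exposes APIs and returns result data without showing anything, such as arithmetic helpers for addition, subtraction, multiplication and division, can be a Module.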
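From the front end the two types are also consumed differently. A Component is written as a tag in the page template, like `<camera-view>` above, while a Module is fetched by name with `uni.requireNativePlugin` and called like a plain object. The sketch below illustrates the Module side only; the module name `Calculator` and its `add` method are hypothetical, and a stand-in object replaces the real `uni` runtime so the snippet can run anywhere.

```javascript
// Sketch: calling a Module. `uniLike` stands in for the global `uni` object.
// "Calculator" and `add` are hypothetical names used for illustration.
function addWithNativeModule(uniLike, a, b, onResult) {
  const calculator = uniLike.requireNativePlugin("Calculator");
  calculator.add({ a, b }, onResult);
}

// Stand-in for the real runtime; in an app you would pass `uni` instead.
const fakeUni = {
  requireNativePlugin(name) {
    if (name !== "Calculator") throw new Error("unknown plugin: " + name);
    return { add: ({ a, b }, callback) => callback({ result: a + b }) };
  },
};

let received = null;
addWithNativeModule(fakeUni, 2, 3, (res) => { received = res; });
```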

Link: download the plugin and the demo project

I. Implementing the Android native plugin

First, the Android class extends UniComponent, the base class that uniapp provides for components:

public class LuanQingCamera extends UniComponent<FrameLayout>

Return the host view from the required initComponentHostView method:

@Override
protected FrameLayout initComponentHostView(Context context) {
    // Use a FrameLayout as the host view (this makes it easy to add the watermark on top later)
    FrameLayout frameLayout = new FrameLayout(context);
    // Create a SurfaceView to display the camera preview
    SurfaceView surfaceView = new SurfaceView(context);
    // Add it to the layout
    frameLayout.addView(surfaceView);
    if (mHolder == null) {
        mHolder = surfaceView.getHolder();
        mHolder.addCallback(new SurfaceHolder.Callback() {
            @Override
            public void surfaceCreated(SurfaceHolder holder) {
                // Check permissions; if they are sufficient, open the camera and initialize the preview
                checkPermission();
            }
            @Override
            public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
            }
            @Override
            public void surfaceDestroyed(SurfaceHolder holder) {
            }
        });
    }
    return frameLayout;
}

Request permissions. Starting with Android 6.0, dangerous permissions must be requested at runtime, so we request them before using the camera:

@UniJSMethod
public void checkPermission() {
    Context context = mUniSDKInstance.getContext();
    if(context instanceof Activity){
        // Permissions that still need to be requested
        List<String> permissions = new ArrayList<>();
        // Flag: are all required permissions already available?
        boolean isEnoughPermission = true;
        // Permission check START
        List<PermissionEntity> checkList = new ArrayList<>();
        checkList.add(new PermissionEntity(Manifest.permission.CAMERA,"camera permission"));
        checkList.add(new PermissionEntity(Manifest.permission.RECORD_AUDIO,"audio recording permission"));
        checkList.add(new PermissionEntity(Manifest.permission.WRITE_EXTERNAL_STORAGE,"file read/write permission"));
        for (PermissionEntity p : checkList){
            // Is the permission currently granted?
            boolean isHas = ActivityCompat.checkSelfPermission(context, p.getPermissionName()) == PackageManager.PERMISSION_GRANTED;
            if (isHas) {
                // Already granted (the user may have enabled it in Settings): store the status as granted
                SharedData.setParam(context,p.getPermissionName(),1);
            }
            // Stored status: 0 = not granted, 1 = granted, 2 = denied by the user
            int status = (int) SharedData.getParam(context,p.getPermissionName(),0);
            if(status == 0){
                // Add to the request list
                permissions.add(p.getPermissionName());
                isEnoughPermission = false;
            }else if(status == 2){
                isEnoughPermission = false;
                backData("receiveInfo", 2003 ,"Missing "+p.getDescribe());
            }
        }
        // If all permissions are available, initialize the camera right away
        if(isEnoughPermission){
            initCameraOption();
            return;
        }
        // Permission check END
        if(permissions.size() > 0){
            EsayPermissions.with((Activity) context).permission(permissions).request(new OnPermission() {
                @Override
                public void hasPermission(List<String> granted, boolean isAll) {
                    if(isAll){
                        initCameraOption();
                    }else{
                        backData("receiveInfo", 2003 ,"Missing camera | audio recording | file read/write permission");
                    }
                }
                @Override
                public void noPermission(List<String> denied, boolean quick) {
                    // Remember the denied permissions so the request dialog is not shown again;
                    // asking repeatedly risks the app being rejected or removed by app stores
                    for (String permission : denied){
                        // Denied by the user
                        SharedData.setParam(context,permission,2);
                    }
                    backData("receiveInfo", 2003 ,"Camera | audio recording | file read/write permission not granted");
                }
            });
        }
    }
}
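The decision made inside the loop above can be restated independently of Android. The sketch below is written in JavaScript only so that it can run outside an Android project; `planPermissionRequest` is a hypothetical function, and the status values 0, 1 and 2 follow the comment in the Java code.

```javascript
// Status values follow the article: 0 = not granted, 1 = granted, 2 = denied by the user.
// Hypothetical, platform-independent restatement of the permission loop.
function planPermissionRequest(names, grantedNow, stored) {
  const toRequest = [];
  const blocked = [];
  for (const name of names) {
    // A permission the system reports as granted overrides the stored status.
    const status = grantedNow.has(name) ? 1 : (stored[name] ?? 0);
    if (status === 0) {
      toRequest.push(name); // never asked: ask now
    } else if (status === 2) {
      blocked.push(name); // refused before: report it, do not ask again
    }
  }
  return { ready: toRequest.length === 0 && blocked.length === 0, toRequest, blocked };
}

const plan = planPermissionRequest(
  ["CAMERA", "RECORD_AUDIO", "WRITE_EXTERNAL_STORAGE"],
  new Set(["CAMERA"]),
  { RECORD_AUDIO: 2 }
);
// plan.toRequest is ["WRITE_EXTERNAL_STORAGE"], plan.blocked is ["RECORD_AUDIO"], plan.ready is false.
```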

Start the camera preview so that the camera image becomes visible:

// Start the preview
@UniJSMethod
public void startPreview() {
    // Nothing to do if the camera has not been opened yet
    if (mCameraDevice == null) return;
    try {
        if(mCameraCaptureSession != null){
            mCameraCaptureSession.stopRepeating(); // stop the previous repeating request before switching back to the preview
            mCameraCaptureSession.close(); // close the previous session
            mCameraCaptureSession = null;
        }
        // Create the preview request
        mPreviewCaptureRequestBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
        // Use continuous autofocus
        mPreviewCaptureRequestBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);
        // Use the Surface as the target that displays the preview frames
        mPreviewCaptureRequestBuilder.addTarget(mHolder.getSurface());
        // Create the capture session. The first argument is the list of output Surfaces, the second is the
        // CameraCaptureSession state callback (onConfigured is called once the session is ready), and the third
        // selects the thread the callback runs on (null means the current thread)
        mCameraDevice.createCaptureSession(Arrays.asList(mHolder.getSurface(),mImageReader.getSurface()),new CameraCaptureSession.StateCallback() {
            @Override
            public void onConfigured(CameraCaptureSession session) {
                mCameraCaptureSession = session;
                try {
                    // Start the preview
                    mPreviewCaptureRequest = mPreviewCaptureRequestBuilder.build();
                    UniLogUtils.e("Preview initialized and started");
                    // Issue a repeating request so the preview keeps receiving frames
                    mCameraCaptureSession.setRepeatingRequest(mPreviewCaptureRequest, null, null);
                } catch (CameraAccessException e) {
                    e.printStackTrace();
                }
            }
            @Override
            public void onConfigureFailed(CameraCaptureSession session) {
                UniLogUtils.e("Preview failed");
            }
        }, null);
    } catch (CameraAccessException e) {
        e.printStackTrace();
    }
}

Take the photo:

@UniJSMethod
public void takePhoto() {
    UniLogUtils.e("Preparing to take a photo");
    // Nothing to do if the camera or the capture session is not ready yet
    if (mCameraDevice == null || mCameraCaptureSession == null) return;
    try {
        imageFileName = System.currentTimeMillis() + ".jpg";
        // First create the CaptureRequest for a still capture
        CaptureRequest.Builder captureBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_STILL_CAPTURE);
        Context context = mUniSDKInstance.getContext();
        if(context instanceof Activity){
            Activity activity = (Activity) context;
            // Get the display rotation
            int rotation = activity.getWindowManager().getDefaultDisplay().getRotation();
            // Besides its many parameters, a CaptureRequest needs at least one target Surface
            // (every camera operation ultimately captures an image)
            captureBuilder.addTarget(mImageReader.getSurface());
            // Autofocus (disabled)
            //captureBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);
            // Auto-exposure on
            captureBuilder.set(CaptureRequest.CONTROL_AE_MODE, CaptureRequest.CONTROL_AE_MODE_ON);
            //captureBuilder.set(CaptureRequest.CONTROL_AE_MODE, CaptureRequest.CONTROL_AE_MODE_OFF);
            // Pitfall: the exposure mode must be set before the flash mode
            if(flashState == 0){
                captureBuilder.set(CaptureRequest.FLASH_MODE, CaptureRequest.FLASH_MODE_OFF);
            }else{
                captureBuilder.set(CaptureRequest.FLASH_MODE, CaptureRequest.FLASH_MODE_SINGLE);
            }
            //captureBuilder.set(CaptureRequest.CONTROL_AE_MODE, CaptureRequest.CONTROL_AE_MODE_ON_ALWAYS_FLASH);
            //captureBuilder.set(CaptureRequest.FLASH_MODE, CaptureRequest.FLASH_MODE_SINGLE);
            captureBuilder.set(CaptureRequest.JPEG_ORIENTATION, ORIENTATIONS.get(rotation));
            mCameraCaptureSession.stopRepeating();
            CameraCaptureSession.CaptureCallback captureCallback = new CameraCaptureSession.CaptureCallback() {
                @Override
                public void onCaptureCompleted(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull TotalCaptureResult result) {
                    super.onCaptureCompleted(session, request, result);
                    UniLogUtils.e("Photo taken successfully");
                    backData("takePhotoSuccess", 200 ,"ok");
                    // Return to the live preview
                    startPreview();
                }
                @Override
                public void onCaptureFailed(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull CaptureFailure failure) {
                    super.onCaptureFailed(session, request, failure);
                    UniLogUtils.e("Photo capture failed");
                    backData("takePhotoFail", 2001 ,"Photo capture failed");
                }
            };
            UniLogUtils.e("Taking photo");
            mCameraCaptureSession.capture(captureBuilder.build(), captureCallback, null);
        }
    } catch (CameraAccessException e) {
        e.printStackTrace();
    }
}
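The `ORIENTATIONS` table used for `JPEG_ORIENTATION` is not shown in the article. The sketch below uses the values from Google's Camera2Basic sample (an assumption about what the author's table contains) together with that sample's formula, which also takes the sensor's mounting angle into account. It is written in JavaScript so it can run outside Android; `jpegOrientation` is a hypothetical name. When the sensor is mounted at 90 degrees the formula reduces to the table lookup used above; other mounting angles need the extra term.

```javascript
// Display rotation index (Surface.ROTATION_0 .. ROTATION_270 are 0 .. 3) -> base JPEG angle.
// Values follow Google's Camera2Basic sample; the article's own table is not shown.
const ORIENTATIONS = { 0: 90, 1: 0, 2: 270, 3: 180 };

// sensorOrientation is the value of CameraCharacteristics.SENSOR_ORIENTATION (0, 90, 180 or 270).
function jpegOrientation(displayRotation, sensorOrientation) {
  return (ORIENTATIONS[displayRotation] + sensorOrientation + 270) % 360;
}

// Portrait phone (rotation 0) with a sensor mounted at 90 degrees: 90.
const portrait = jpegOrientation(0, 90);
// Same phone rotated to ROTATION_90: 0.
const landscape = jpegOrientation(1, 90);
```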

II. Implementing the iOS native plugin

By comparison, the iOS side is simpler.

Header file (.h):

#import <AVFoundation/AVFoundation.h>
#import "DCUniComponent.h"

NS_ASSUME_NONNULL_BEGIN

@interface LQCamera : DCUniComponent

@end

NS_ASSUME_NONNULL_END

In the .m file, implement the required method and return a view:

- (UIView *)loadView {
    NSLog(@"Plugin log: loadView");
    return [UIView new];
}

Initialize the camera parameters:

- (void)viewDidLoad {
    NSLog(@"Plugin log: viewDidLoad");
    self.session = [[AVCaptureSession alloc] init];
    // Create an AVCaptureMovieFileOutput instance, used to record QuickTime movies to the file system
    self.movieOutput = [[AVCaptureMovieFileOutput alloc]init];
    // Add the output to the session if the session accepts it
    if ([self.session canAddOutput:self.movieOutput])
    {
        [self.session addOutput:self.movieOutput];
    }
    // The capture resolution can be chosen freely:
    //AVCaptureSessionPresetHigh
    //AVCaptureSessionPreset320x240
    //AVCaptureSessionPreset352x288
    //AVCaptureSessionPreset640x480
    //AVCaptureSessionPreset960x540
    //AVCaptureSessionPreset1280x720
    //AVCaptureSessionPreset1920x1080
    //AVCaptureSessionPreset3840x2160
    self.session.sessionPreset = AVCaptureSessionPreset1920x1080;
    // AVCaptureStillImageOutput instance: captures still images from the camera
    // (this class is deprecated since iOS 10; AVCapturePhotoOutput is its replacement)
    self.imageOutput = [[AVCaptureStillImageOutput alloc]init];
    // Settings dictionary: capture images in JPEG format
    self.imageOutput.outputSettings = @{AVVideoCodecKey:AVVideoCodecJPEG};
    if ([self.session canAddOutput:self.imageOutput]) {
        [self.session addOutput:self.imageOutput];
    }

    self.device = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
    NSError * error = nil;
    self.input = [AVCaptureDeviceInput deviceInputWithDevice:self.device error:&error];
    if (self.input && [self.session canAddInput:self.input]) {
        [self.session addInput:self.input];
    }else{
        NSLog(@"Input Error:%@",error);
    }

    // Create the preview layer
    self.previewLayer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:self.session];
    // Use this component's bounds for positioning; they already reflect the CSS width and height passed in from uniapp
    self.previewLayer.frame = self.view.bounds; // the preview layer fills the view

    // AVLayerVideoGravityResizeAspectFill: scales proportionally until the whole view is filled; one dimension is partly cropped
    // AVLayerVideoGravityResize: non-uniform scaling; both dimensions are stretched to fill the view
    // AVLayerVideoGravityResizeAspect: scales proportionally until one dimension reaches the edge of the view
    self.previewLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;
    [self.view.layer addSublayer:self.previewLayer];

    [self.session startRunning];
}

Some standard boilerplate methods:

/// Called when the front end updates attributes
/// @param attributes the updated attributes
- (void)updateAttributes:(NSDictionary *)attributes {
    // Parse the attributes
    if (attributes[@"showsTraffic"]) {
        //_showsTraffic = [DCUniConvert BOOL: attributes[@"showsTraffic"]];
    }
}

/// Called when the front end registers an event
/// @param eventName the event name
- (void)addEvent:(NSString *)eventName {
    if ([eventName isEqualToString:@"mapLoaded"]) {
    }
}

/// The matching callback for removing an event
/// @param eventName the event name
- (void)removeEvent:(NSString *)eventName {
    if ([eventName isEqualToString:@"mapLoaded"]) {
    }
}

Sending callbacks from iOS back to the front end:

// Callback that returns information to the front end
// Fires an event to the front end; params is the data passed along.
// Note: the outermost level must be an NSDictionary that uses "detail" as its key
- (void) returnFunc:(NSString *) func returnCode:(NSNumber *)code returnMess:(NSString *) message{
    NSString *imgUrl = self.imagePath ? self.imagePath : @"";
    NSString *vioUrl = self.videoPath ? self.videoPath : @"";

    [self fireEvent:func params:@{@"detail":@{@"code":code,@"message":message,@"videoPath":vioUrl,@"imagePath":imgUrl}} domChanges:nil];
}
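On the front end, the handlers bound in the template (`takePhotoSuccess`, `recordSuccess`, and so on) receive this payload. A minimal sketch of reading it is shown here; the shape `{ detail: { code, message, imagePath, videoPath } }` comes from `returnFunc` above, while `parseCameraEvent` is a hypothetical helper and treating 200 as the only success code is an assumption based on the samples in this article.

```javascript
// Hypothetical front-end helper for events fired by the plugin.
// Payload shape is taken from returnFunc; the 200 success code is an assumption.
function parseCameraEvent(event) {
  const detail = (event && event.detail) || {};
  return {
    ok: detail.code === 200,
    message: detail.message || "",
    // The native side sends empty strings when no file exists, so map them to null.
    imagePath: detail.imagePath || null,
    videoPath: detail.videoPath || null,
  };
}

// Usage in a page method:
// takePhotoSuccess(e) { const result = parseCameraEvent(e); if (result.ok) { /* show result.imagePath */ } }
```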

Exposed interfaces: taking a photo, recording video (start and stop), toggling the flash, switching the camera lens, setting the watermark content, and so on:

// Exposed methods START
// Methods are exposed to the front end with UNI_EXPORT_METHOD
UNI_EXPORT_METHOD(@selector(openFlash))
// Turn the flash on
- (void)openFlash {
    [self setFlashMode:AVCaptureFlashModeOn];
}

UNI_EXPORT_METHOD(@selector(closeFlash))
// Turn the flash off
- (void)closeFlash {
    [self setFlashMode:AVCaptureFlashModeOff];
}

UNI_EXPORT_METHOD(@selector(autoFlash))
// Automatic flash
- (void)autoFlash {
    [self setFlashMode:AVCaptureFlashModeAuto];
}

UNI_EXPORT_METHOD(@selector(openFront))
// Switch to the front camera
- (void)openFront {
    [self switchCamer:AVCaptureDevicePositionFront];
}

UNI_EXPORT_METHOD(@selector(openBack))
// Switch to the back camera
- (void)openBack {
    [self switchCamer:AVCaptureDevicePositionBack];
}

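// Methods are exposed to the front end with UNI_EXPORT_METHOD
UNI_EXPORT_METHOD(@selector(takePhoto:))
// Take a photo
- (void)takePhoto:(NSDictionary *)options {
    // options holds the parameters passed from the front end
    NSLog(@"iOS received a take-photo request");
    // Get the connection
    AVCaptureConnection *connection = [self.imageOutput connectionWithMediaType:AVMediaTypeVideo];

    // The app only supports portrait, but if the user holds the phone in landscape the photo orientation must be adjusted
    // Check whether setting the video orientation is supported
    if (connection.isVideoOrientationSupported) {
        // Apply the current orientation
        connection.videoOrientation = [self currentVideoOrientation];
    }

    // Define a handler block that receives the captured image as NSData
    id handler = ^(CMSampleBufferRef sampleBuffer,NSError *error)
    {
        if (sampleBuffer != NULL) {
            NSData *imageData = [AVCaptureStillImageOutput jpegStillImageNSDataRepresentation:sampleBuffer];
            UIImage *image = [[UIImage alloc]initWithData:imageData];
            [self returnFunc:@"takePhotoSuccess" returnCode:@200 returnMess:@"Photo taken successfully"];
            // Key step: once the image has been captured, pass it on to be saved
            [self saveImage:image];
        }else
        {
            NSLog(@"Save failed, NULL sampleBuffer:%@",[error localizedDescription]);
            // Report the failure to the front end, using the same code as the Android side
            [self returnFunc:@"takePhotoFail" returnCode:@2001 returnMess:@"Photo capture failed"];
        }
    };
    // Capture the still image
    [self.imageOutput captureStillImageAsynchronouslyFromConnection:connection completionHandler:handler];
}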

UNI_EXPORT_METHOD(@selector(addWaterText:))
// Set the watermark content
- (void)addWaterText:(NSDictionary *)options{
    NSLog(@"Received watermark content:%@",options);
    if(options[@"time"]){
        self.timeStr = options[@"time"];
    }
    if(options[@"date"]){
        self.dateStr = options[@"date"];
    }
    if(options[@"week"]){
        self.weekStr = options[@"week"];
    }
    if(options[@"address"]){
        self.addressStr = options[@"address"];
    }
    if(options[@"remark"]){
        self.remarkStr = options[@"remark"];
    }
    if(options[@"logo"]){
        self.logoStr = options[@"logo"];
    }
}

// Stop recording
UNI_EXPORT_METHOD(@selector(stopRecord))
- (void)stopRecord {
    NSLog(@"Stop recording");
    [self.movieOutput stopRecording];
}

// Start recording
UNI_EXPORT_METHOD(@selector(startRecord))
- (void)startRecord {
    NSLog(@"Start recording");
    // Get the current video capture connection, used to configure core properties of the captured video
    AVCaptureConnection * videoConnection = [self.movieOutput connectionWithMediaType:AVMediaTypeVideo];
    // Check whether the videoOrientation property can be set
    if([videoConnection isVideoOrientationSupported])
    {
        // If so, set the orientation of the video
        videoConnection.videoOrientation = [self currentVideoOrientation];
    }
    // Check whether video stabilization is supported; it can noticeably improve video quality
    // and only applies when recording to a movie file
    if([videoConnection isVideoStabilizationSupported])
    {
        videoConnection.enablesVideoStabilizationWhenAvailable = YES;
    }
    AVCaptureDevice *device = self.input.device;
    // Smooth autofocus slows down lens movement so that refocusing while the user moves
    // is less abrupt than the default fast autofocus
    if (device.isSmoothAutoFocusSupported) {
        NSError *error;
        if ([device lockForConfiguration:&error]) {
            device.smoothAutoFocusEnabled = YES;
            [device unlockForConfiguration];
        }else
        {
            //[self.delegate deviceConfigurationFailedWithError:error];
        }
    }
    // Find a unique file system URL to write the captured video to
    //self.outputURL = [self uniqueURL];
    NSLog(@"Start recording 2");
    // Start recording on the capture output. Argument 1: the output file URL; argument 2: the delegate
    [self.movieOutput startRecordingToOutputFileURL:[self outPutFileURL] recordingDelegate:self];
}
// Exposed methods END
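On the page side, recording could be driven by a single button in the same way `switchFlash` is. The sketch below is only an illustration: `createRecordToggle` is a hypothetical wrapper, and the only plugin calls it relies on are `startRecord()` and `stopRecord()` from the list above.

```javascript
// Hypothetical wrapper around the component ref (this.$refs.cameraObj in the page).
// Only startRecord() and stopRecord() come from the plugin; the rest is illustration.
function createRecordToggle(cameraRef) {
  let recording = false;
  return {
    isRecording: () => recording,
    // Starts recording when idle, stops it when recording; returns the new state.
    toggle() {
      if (recording) {
        cameraRef.stopRecord();
      } else {
        cameraRef.startRecord();
      }
      recording = !recording;
      return recording;
    },
  };
}

// Usage in a page: const recorder = createRecordToggle(this.$refs.cameraObj); recorder.toggle();
```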

That completes a uniapp native plugin covering both Android and iOS.


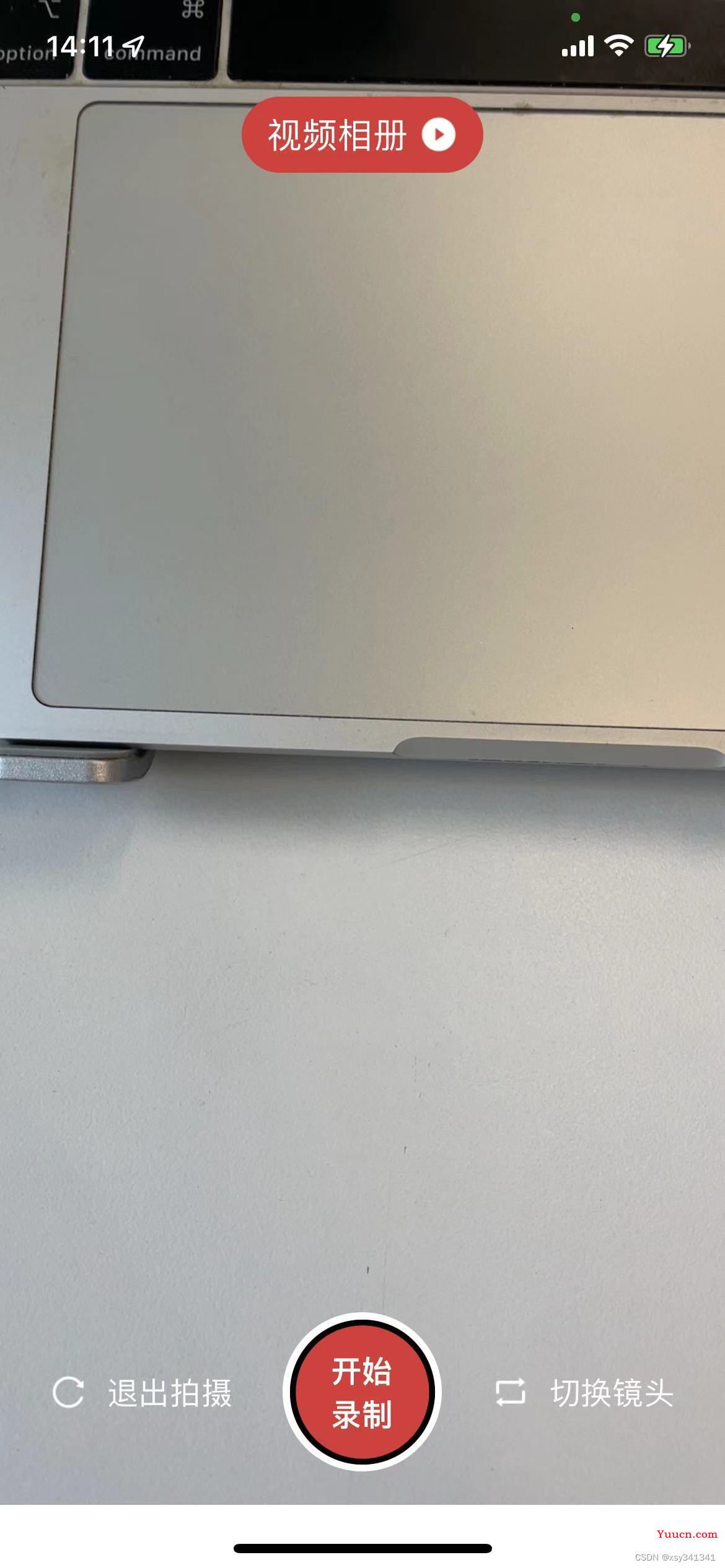

Result:

https://juejin.cn/post/7107058762673815566

If this was helpful, feel free to follow me. I post technical write-ups regularly, so we can discuss, learn and improve together.